How Do Data Centers Work: Understanding Their Role Today

Ever wondered how data centers work? These hubs power the internet by housing coordinated arrays of servers and other hardware that store, manage, and distribute data across networks. Fail-safe power and purpose-built cooling are essential, while software governs day-to-day operations. In exploring the inner workings of data centers, we’ll show how these elements work together to keep our digital landscape running and secure, without diving too deep just yet.

Key Takeaways

- Data centers are foundational to IT operations, housing complex configurations of servers, storage systems, and network infrastructure, centralizing storage, management, and dissemination of data.

- Modern data centers offer colocation services and integrate with virtualization and cloud technologies, enhancing scalability and flexibility while implementing robust security measures both physically and digitally.

- Environmental sustainability is a critical consideration with data centers impacting water usage and energy consumption, leading to the adoption of eco-friendly solutions such as distributed cloud technologies.

Decoding Data Center Operations

Every day, countless data transactions course through the veins of the digital realm, converging in the heart of what can only be described as the engine room of the Internet: the data center. Pivotal in centralizing an organization’s IT operations, these data fortresses store, manage, and disseminate the information that enables e-commerce, big data analytics, and the burgeoning world of remote work. Peel back the layers and a data center turns out to be a complex tapestry of servers, storage systems, and network infrastructure, one that has evolved from on-premises physical servers into cloud-integrated powerhouses supporting applications that span multi-cloud environments.

At the core, data center operations and services are a finely tuned dance of data processing and distribution, running on a grid of physical or virtual servers. These servers are connected through a web of networking equipment, while software coordinates their work and distributes load among them. The components work in concert to keep data flowing through our increasingly digital existence, and effective data center management keeps them performing reliably.
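The article doesn’t say how orchestration software spreads load across servers. As one illustration, here is a minimal sketch of a common strategy, least-connections balancing, in which each new request goes to the server currently handling the fewest requests. The `Server` and `LeastConnectionsBalancer` names are hypothetical, and production load balancers also weigh health checks and server capacity.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    active_connections: int = 0


class LeastConnectionsBalancer:
    """Hypothetical balancer: send each new request to the least-busy server."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("need at least one server")
        self.servers = list(servers)

    def acquire(self) -> Server:
        # min() returns the first server with the lowest count,
        # so ties go to the server listed earliest.
        server = min(self.servers, key=lambda s: s.active_connections)
        server.active_connections += 1
        return server

    def release(self, server: Server) -> None:
        # Call when a request finishes so the server's count drops again.
        server.active_connections = max(0, server.active_connections - 1)


if __name__ == "__main__":
    pool = LeastConnectionsBalancer([Server("web-1"), Server("web-2"), Server("web-3")])
    first = pool.acquire()   # all idle, so web-1 wins the tie
    second = pool.acquire()  # web-2 now has the fewest connections
    pool.release(first)      # web-1 finishes its request
    third = pool.acquire()   # web-1 is idle again
    print(first.name, second.name, third.name)  # web-1 web-2 web-1
```

Least-connections adapts better than simple round-robin when requests vary widely in duration, because a server stuck on slow requests stops receiving new ones until it catches up.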

The Heart of the Data Center: Servers and Storage Systems

Servers in data centers are akin to high-performance athletes, specialized and trained for the dedicated tasks they perform. With more memory, faster processors, and greater capabilities than typical desktop computers, these servers are the workhorses that run a wide range of applications for specific clients. Most are flat, rectangular units stacked in racks or enclosed server cabinets, which makes efficient use of floor space while keeping the hardware accessible, reflecting the efficiency that is paramount in the world of data storage.

But what of the data itself? Storage systems in data centers are akin to vast libraries, holding immense volumes of data in a range of formats, from file storage devices that organize data as files and folders to block storage devices that provide many terabytes of raw capacity as fixed-size blocks. These systems form the bedrock of our digital repository, and effective cooling is essential to keep them performing well. Storage is only part of the picture, though: data centers also connect to many external communication networks, linking stored and processed data to the global digital ecosystem.

Networking Within the Data Fortress: Connectivity and Communication

In the realm of a data center, connectivity is king. The network infrastructure is a complex weave of networking devices, including:

- cables

- switches

- routers

- firewalls

Each plays a distinct role in digital communication: cables carry the signals, switches forward traffic between devices inside the facility, routers direct traffic between networks, and firewalls filter what is allowed in and out. Together they are the conduits that carry data from the servers and storage systems to end users, forming the broader data center network.

This network serves not only to connect the internal components of the data center but also to bridge the gap to the external world, ensuring uninterrupted flow and communication. The very essence of a data center’s ability to function efficiently hinges on this intricate network infrastructure, orchestrating the symphony of data that powers our digital lives.
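Firewalls decide what crosses that boundary between the data center and the outside world. Many firewalls evaluate an ordered rule list where the first matching rule wins and anything unmatched falls through to a default-deny policy. The minimal sketch below illustrates that idea; the `Rule` type, the `evaluate` function, and the rule set are hypothetical, and the addresses come from ranges reserved for documentation.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    action: str           # "allow" or "deny"
    source: str           # source network in CIDR notation
    port: Optional[int]   # destination port; None matches any port


# Hypothetical rule set using documentation-only address ranges.
RULES = [
    Rule("deny", "203.0.113.0/24", None),  # block a known-bad range outright
    Rule("allow", "0.0.0.0/0", 443),       # HTTPS from anywhere
    Rule("allow", "10.0.0.0/8", 22),       # SSH only from the internal network
]


def evaluate(src_ip: str, dst_port: int, rules=RULES, default: str = "deny") -> str:
    """Return the action of the first rule that matches; otherwise the default."""
    addr = ipaddress.ip_address(src_ip)
    for rule in rules:
        in_network = addr in ipaddress.ip_network(rule.source)
        if in_network and rule.port in (None, dst_port):
            return rule.action
    return default


print(evaluate("198.51.100.4", 443))  # allow
print(evaluate("203.0.113.7", 443))   # deny (first rule wins)
print(evaluate("198.51.100.4", 22))   # deny (no rule matches, default applies)
print(evaluate("10.1.2.3", 22))       # allow
```

Rule order matters: because the deny rule comes first, hosts in the blocked range are refused even on port 443, which the broader allow rule below it would otherwise accept.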

Powering the Digital Engine: Energy Supply and Cooling Systems

The continuous operation of a data center and the availability of its services depend on a stable, uninterrupted power supply. It’s the lifeblood that keeps the digital heart beating, and without it, the information age would grind to a halt. To guard against outages, data centers are fortified with backup systems: uninterruptible power supplies (UPS) carry the load on battery the instant utility power fails, bridging the gap until on-site generators start and take over. Together, these safeguards keep power flowing and data accessible even during unpredictable outages, which is why robust data center power is central to operational continuity.
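How long UPS batteries must last depends on how quickly the generators come online. The sketch below is a rough, linear estimate under stated assumptions: the battery capacity, load, 90% inverter efficiency, and generator start time are hypothetical figures, and real runtime also depends on battery age, temperature, and discharge rate, which is why vendors publish runtime curves instead.

```python
def ups_runtime_minutes(battery_wh: float, load_w: float,
                        inverter_efficiency: float = 0.9) -> float:
    """Linear runtime estimate: usable battery energy divided by the load."""
    if load_w <= 0:
        raise ValueError("load must be positive")
    usable_wh = battery_wh * inverter_efficiency  # some energy is lost converting DC to AC
    return usable_wh / load_w * 60


def bridges_to_generator(battery_wh: float, load_w: float,
                         generator_start_s: float,
                         inverter_efficiency: float = 0.9) -> bool:
    """True if the batteries can carry the load until the generator takes over."""
    runtime_s = ups_runtime_minutes(battery_wh, load_w, inverter_efficiency) * 60
    return runtime_s >= generator_start_s


# Hypothetical: 10 kWh of batteries behind a 40 kW load, generator ready in 60 s.
print(f"{ups_runtime_minutes(10_000, 40_000):.1f} min")            # 13.5 min
print(bridges_to_generator(10_000, 40_000, generator_start_s=60))  # True
```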

But with great power comes great responsibility: the responsibility to cool the engines of this digital beast. Efficient cooling is a necessity, not a luxury, because overheating can damage equipment and cause outages. Cooling systems have evolved to include in-rack or in-row cooling, which can be up to three times more energy-efficient than traditional room-level cooling, and air-side economizers, which use outside air and can cut cooling energy use by nearly 90% under optimal conditions. Data Center Infrastructure Management (DCIM) systems help optimize cooling by matching capacity and airflow to the IT load, potentially cutting energy costs by up to 30%.
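Matching cooling capacity to IT load rests on simple arithmetic: nearly every watt that IT equipment draws ends up as heat. Here is a minimal sketch of that calculation using the standard conversions 1 W ≈ 3.412 BTU/h and 1 ton of refrigeration = 12,000 BTU/h. The 20% safety margin, the zone names, and their figures are hypothetical, and this is not how any particular DCIM product computes it.

```python
WATTS_TO_BTU_PER_HOUR = 3.412   # 1 W of electrical load ≈ 3.412 BTU/h of heat
BTU_PER_HOUR_PER_TON = 12_000   # 1 ton of refrigeration = 12,000 BTU/h


def cooling_tons_required(it_load_kw: float, safety_margin: float = 0.2) -> float:
    """Cooling needed to remove the heat produced by an IT load.

    Assumes essentially all electrical power drawn by IT equipment ends up as heat.
    """
    heat_btu_per_hour = it_load_kw * 1000 * WATTS_TO_BTU_PER_HOUR
    return heat_btu_per_hour / BTU_PER_HOUR_PER_TON * (1 + safety_margin)


def cooling_headroom(installed_tons: float, it_load_kw: float) -> float:
    """Positive: spare cooling capacity; negative: the zone is under-cooled."""
    return installed_tons - cooling_tons_required(it_load_kw)


# Hypothetical zones: installed cooling (tons) vs. measured IT load (kW).
zones = {"row-A": (40, 100), "row-B": (20, 80)}
for zone, (tons, load_kw) in zones.items():
    print(f"{zone}: headroom {cooling_headroom(tons, load_kw):+.1f} tons")
```

A negative headroom flags a zone where cooling should be added or load moved; a large positive headroom suggests cooling that could be throttled back to save energy.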

The Anatomy of a Modern Data Center

As we delve deeper into the anatomy of the modern data center, we find a sophisticated organism composed of essential components (servers, storage systems, and networking equipment), supported by robust power and cooling infrastructure and bound together by cabling and software. These facilities have become more than mere storage locations; many now offer colocation services, renting out space along with essential amenities such as bandwidth, cooling, networking, power, and, critically, security, while clients retain management of their own IT equipment. The modern data center is a fortress, with security measures designed to protect the critical and proprietary assets it houses, safeguarding data and keeping services running.

Yet security is not the only cornerstone of a modern data center. Virtualization and cloud integration have extended the boundaries of these facilities, allowing applications and workloads to run across pools of physical infrastructure and integrate into multi-cloud environments. DCIM software is the linchpin of this evolution, giving data center managers an accurate picture of their IT equipment and thereby strengthening the link between virtual and physical resources. This evolution not only expands the capacity and flexibility of data centers but also represents a leap forward in the way we manage and interact with the digital world.

A Secure Foundation: Physical Infrastructure and Security Systems

In the world of data centers, security is paramount, and it extends far beyond the digital realm into the physical. A modern data center employs multiple layers of security measures, such as:

- Sophisticated alarms

- Biometric scanners

- Gates

- Security doors

- A contingent of on-site security personnel

Together, these form a layered physical barrier. Strict access control protocols reflect how seriously these facilities take their role as custodians of sensitive information: regular access reviews keep permissions current and revoke those no longer needed, while electronic door locks and biometric scanners enforce who can enter each area.
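An access review boils down to a periodic query over who holds which permissions. As a minimal sketch, assuming a hypothetical export of access grants with a last-used date and an employment flag, the review might flag anyone who has left the organization or hasn’t used a grant in 90 days. The `AccessGrant` fields, the 90-day threshold, and the sample data are all hypothetical; real access-control systems store and export this data in their own formats.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class AccessGrant:
    holder: str
    area: str          # e.g. a hypothetical "data-hall-2"
    last_used: date    # last badge or biometric entry
    employed: bool = True


def grants_to_revoke(grants, today: date, max_idle_days: int = 90):
    """Flag grants held by departed staff or unused for longer than max_idle_days."""
    cutoff = today - timedelta(days=max_idle_days)
    return [g for g in grants if not g.employed or g.last_used < cutoff]


grants = [
    AccessGrant("alice", "data-hall-2", date(2024, 6, 20)),
    AccessGrant("bob", "data-hall-2", date(2024, 1, 15)),               # idle too long
    AccessGrant("carol", "cage-7", date(2024, 6, 28), employed=False),  # has left
]
for g in grants_to_revoke(grants, today=date(2024, 6, 30)):
    print(f"revoke {g.holder} -> {g.area}")
```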

Security personnel, 24/7 video surveillance, and environmental controls provide continuous monitoring and protection, making the facility as secure physically as it is digitally. This comprehensive approach to physical security, working alongside digital safeguards, gives enterprises the assurance they need to entrust their most valuable digital assets to data center facilities.

Virtualization and Cloud Integration: Extending Data Center Resources

The march of progress in data centers is marked by the shift from dedicated physical servers to virtualized infrastructure, a transformation that has redefined the landscape of IT infrastructure. Virtualization has revolutionized the way applications and workloads are deployed, freeing them from the constraints of specific hardware and letting them run across a collective pool of resources that can span multiple cloud environments. This integration with the cloud is not merely a trend; it is a strategic imperative that expands the capacity and flexibility of data center resources, allowing a level of scalability previously unattainable.

Data center resources are now more efficiently utilized, leading to cost savings and improved performance.
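Running workloads on a shared pool means deciding which physical host each virtual machine lands on, which is a bin-packing problem. Here is a minimal sketch using first-fit decreasing, a common heuristic. Treating vCPUs as the only resource, along with the workload sizes and host capacity, are simplifying, hypothetical assumptions; real schedulers also weigh memory, storage, and affinity rules. Packing VMs tightly is also what lets consolidation power off underutilized servers.

```python
def first_fit_decreasing(vm_demands: dict, host_capacity: int) -> list:
    """Pack VMs onto as few hosts as possible with the first-fit decreasing heuristic.

    vm_demands maps a VM name to the vCPUs it needs; each host offers host_capacity vCPUs.
    Returns one dict per powered-on host, mapping VM names to their demand.
    """
    hosts = []
    # Place the largest VMs first; they are the hardest to fit later.
    for name, demand in sorted(vm_demands.items(), key=lambda kv: kv[1], reverse=True):
        if demand > host_capacity:
            raise ValueError(f"{name} needs more than a single host provides")
        for host in hosts:
            if sum(host.values()) + demand <= host_capacity:
                host[name] = demand  # first existing host with room wins
                break
        else:
            hosts.append({name: demand})  # no host had room: power on another
    return hosts


# Hypothetical workloads (vCPUs) on 32-vCPU hosts.
vms = {"db": 20, "web-1": 8, "web-2": 8, "cache": 12, "batch": 16, "ci": 4}
for i, host in enumerate(first_fit_decreasing(vms, host_capacity=32)):
    print(f"host-{i}: {host} ({sum(host.values())}/32 vCPUs)")
```

Here six workloads that might once have occupied six servers fit on three hosts, two of them fully used.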

To achieve this integration, DCIM software has become critical, providing the tools needed for data center managers to bridge the gap between virtual and physical infrastructure. Through DCIM optimization, data centers can maximize efficiency and adaptability, creating a dynamic environment that can respond swiftly to the ever-changing demands of the digital age.

Tiers of Reliability: Understanding Data Center Tiers

Not all data centers are created equal. Organizations such as The Uptime Institute classify them into four tiers, from Tier 1 to Tier 4, each indicating a level of expected service uptime and reliability based on a set of criteria including:

- power

- cooling

- maintenance protocols

- redundancy

- fault tolerance

It’s a scale that directly influences the expected service uptime, with Tier 4 data centers boasting an impressive uptime of 99.995% and lower tiers exhibiting progressively more expected downtime.

Tier 1 to Tier 4: From Basic Facilities to Fault Tolerant Fortresses

At the bottom of this hierarchy, Tier 1 data centers offer basic capacity with dedicated cooling equipment and a UPS but lack redundant components, leaving them exposed to a higher expected downtime of 28.8 hours per year. Tier 2 data centers add partial redundancy in power and cooling components, supporting somewhat higher uptime suited to small and medium enterprises. The leap to Tier 3 brings concurrently maintainable systems, which allow maintenance without shutting down IT equipment and cut expected downtime to about 1.6 hours per year.

At the top of the classification, Tier 4 data centers embody fault tolerance, with full redundancy across all components and no single point of failure. These facilities are designed for an expected annual downtime of just 26.3 minutes, setting the gold standard for data center operations.
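The downtime figures follow directly from each tier’s availability percentage: multiply the unavailable fraction by the 8,760 hours in a (non-leap) year. The sketch below uses the availability percentages widely cited for each tier; only the Tier 4 value (99.995%) appears in the text above, so treat the others as commonly quoted reference figures rather than part of this article.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760; ignores leap years

# Availability percentages widely cited for each Uptime Institute tier.
TIER_AVAILABILITY = {
    "Tier 1": 99.671,
    "Tier 2": 99.741,
    "Tier 3": 99.982,
    "Tier 4": 99.995,
}


def annual_downtime_hours(availability_percent: float) -> float:
    """Expected downtime per year implied by an availability percentage."""
    return (1 - availability_percent / 100) * HOURS_PER_YEAR


for tier, pct in TIER_AVAILABILITY.items():
    hours = annual_downtime_hours(pct)
    print(f"{tier}: {pct}% -> {hours:.1f} h ({hours * 60:.1f} min) per year")
```

Running it reproduces the figures in the text: about 28.8 hours for Tier 1, 1.6 hours for Tier 3, and 26.3 minutes for Tier 4.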

The Distributed Cloud: A Sustainable Shift in Data Management

The distributed cloud model offers several benefits, including:

- Addressing environmental sustainability concerns by leveraging data storage and management across multiple geographically dispersed locations

- Balancing on-premises control and the scalability of public cloud services

- Providing geographical flexibility, aiding in compliance with data localization laws

- Reducing dependency on single vendors
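The article doesn’t describe how any particular platform decides where data goes, so the following is a generic, hypothetical sketch of two of the benefits listed above: spreading copies of data across distinct regions for resilience, while only using nodes in jurisdictions that data localization rules permit. The `Node` type, `place_replicas` function, region labels, and node list are all illustrative assumptions, and many real systems use erasure coding rather than full copies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    node_id: str
    region: str        # hypothetical region label, e.g. "eu-west"
    jurisdiction: str  # legal jurisdiction the node sits in, e.g. "EU"


def place_replicas(nodes, copies: int, allowed_jurisdictions: set) -> list:
    """Choose nodes for `copies` replicas: one per region, only in permitted jurisdictions."""
    chosen, used_regions = [], set()
    for node in nodes:
        if node.jurisdiction not in allowed_jurisdictions:
            continue  # data localization rule: never store copies here
        if node.region in used_regions:
            continue  # keep replicas geographically apart
        chosen.append(node)
        used_regions.add(node.region)
        if len(chosen) == copies:
            return chosen
    raise RuntimeError(f"only {len(chosen)} eligible regions for {copies} copies")


nodes = [
    Node("n1", "eu-west", "EU"),
    Node("n2", "eu-west", "EU"),
    Node("n3", "us-east", "US"),
    Node("n4", "eu-central", "EU"),
    Node("n5", "eu-north", "EU"),
]
print([n.node_id for n in place_replicas(nodes, copies=3, allowed_jurisdictions={"EU"})])
# ['n1', 'n4', 'n5']
```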

This new era in data storage and management offers a more responsible approach for the industry. By rethinking data center design and addressing environmental concerns, the distributed cloud is an innovative approach that:

- Aligns with the increasing awareness of the need for sustainable data management practices

- Supports the technological demands of the present

- Embraces the environmental responsibilities of the future.

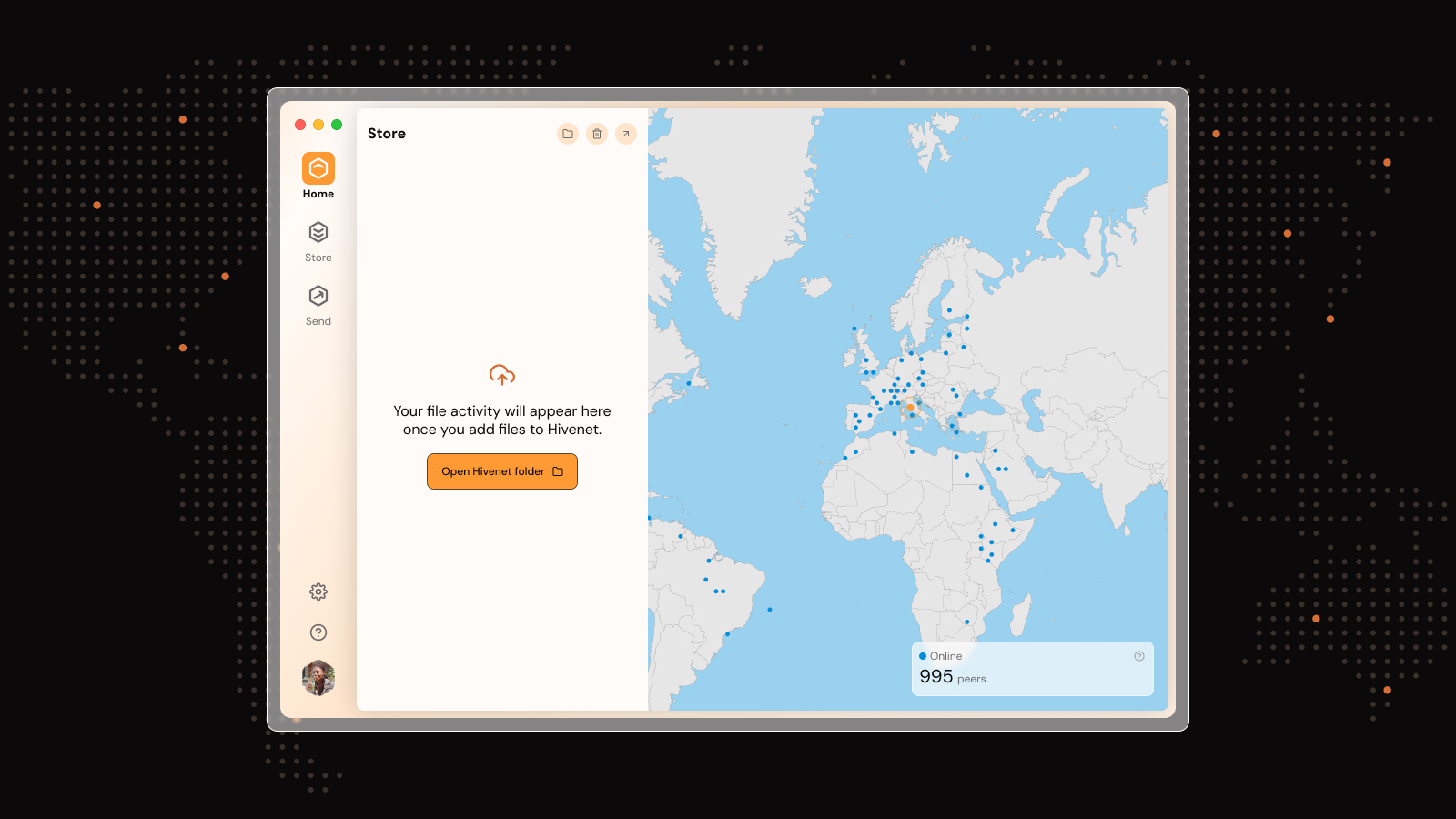

Hivenet: Pioneering the Distributed Cloud Revolution

Leading the charge in the distributed cloud revolution is Hivenet, a platform that champions environmentally responsible practices within the data center industry. Accessible at hivenet.com, Hivenet embodies a community-focused ethos, leveraging contributions from users to build a secure and sustainable network that reflects a collaborative vision for the future of cloud technology. With services such as hiveDisk, Hivenet provides a sustainable and secure solution for cloud storage, placing environmental consciousness and user privacy at the forefront.

As a standard-bearer for distributed cloud solutions, Hivenet stands as a beacon of innovation, driving the industry towards a future where data centers can operate in harmony with the environment. It is the embodiment of a collective effort to mitigate the environmental impact of traditional data storage and management infrastructure.

The Environmental Impact: A Critical Look at Data Centers

It’s an inconvenient truth that data centers, the very pillars of our digital society, come at a considerable environmental cost. The substantial energy and water needed to keep them running have become a focal point for sustainability concerns, and this voracious consumption has consequences: water drawn for cooling adds to water scarcity and pollution challenges in many regions. The design and management of data center infrastructure play a crucial role in addressing these issues.

Conventional data centers, with their reliance on water-based cooling systems, are particularly culpable, with significant water loss through evaporation and the constant need to maintain water quality.

The Cost of Computing: Assessing Energy and Water Usage

The energy-intensive nature of data centers is well documented, with facilities running non-stop to provide the uninterrupted access to information that society demands. Major players like Google and Microsoft each consume billions of gallons of water annually, underscoring the magnitude of the issue. Amazon’s AWS uses notably less water, yet the industry’s overall water use remains high and is ripe for reevaluation. On the energy side, Power Usage Effectiveness (PUE), the ratio of total facility energy to the energy delivered to IT equipment, shows significant room for improvement: a PUE of 1.0 would mean every kilowatt-hour goes to computing, and most data centers operate well above that.
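Both efficiency metrics are simple ratios. PUE, defined by The Green Grid, divides total facility energy by IT equipment energy. Water Usage Effectiveness (WUE), a companion Green Grid metric, divides annual site water use in liters by IT energy in kWh. The facility figures below are hypothetical and chosen only to show the calculation.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT energy (1.0 is ideal)."""
    return total_facility_kwh / it_equipment_kwh


def wue(site_water_liters: float, it_equipment_kwh: float) -> float:
    """Water Usage Effectiveness: liters of site water per kWh of IT energy."""
    return site_water_liters / it_equipment_kwh


# Hypothetical annual figures for a single facility.
it_kwh = 10_000_000
facility_kwh = 15_800_000
water_l = 18_000_000

print(f"PUE = {pue(facility_kwh, it_kwh):.2f}")        # PUE = 1.58
print(f"WUE = {wue(water_l, it_kwh):.2f} L/kWh")       # WUE = 1.80 L/kWh
overhead = facility_kwh - it_kwh  # energy spent on cooling, power conversion, lighting
print(f"Non-IT overhead: {overhead / facility_kwh:.0%} of total energy")
```

In this example, everything above a PUE of 1.0 is overhead: more than a third of the facility’s energy never reaches a server.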

In the quest for sustainability, the distributed cloud model shines as a beacon of hope, its emphasis on reduced power consumption serving to lessen the carbon footprint of data management. This innovative approach not only aligns with the growing societal call for environmental stewardship but also presents an opportunity for the data center industry to redefine its legacy.

Greener Alternatives: Embracing Eco-Friendly Data Solutions

In the face of these environmental challenges, greener alternatives have emerged, offering a path to a more sustainable future for data management. Some of these alternatives include:

- Distributed cloud technologies, such as those pioneered by Hivenet, which have demonstrated the potential to achieve a significant reduction in carbon footprint, with reductions as high as 77%.

- Strategies that encourage the reuse of existing resources and contribute to a decrease in both carbon emissions and the volume of electronic waste generated.

- Solutions like Hivenet that allow users to contribute their own storage space, lowering overall energy usage and reducing storage-related expenses.

These alternatives are helping to create a more sustainable future for data management.

The distributed cloud, therefore, represents a sustainable alternative that can mitigate the environmental costs associated with traditional data center infrastructure. It is an approach that not only addresses the immediate needs of data management but also takes into consideration the long-term health of our planet.

Evolving Landscapes: The Future of Data Centers and Cloud Computing

As we peer into the horizon of technological advancement, the future of data centers is shaped by a growing demand for cloud services and the consequent expansion of hyperscale facilities. Companies like Amazon, Meta, and Google are at the forefront, driving the need for vast IT infrastructure capable of hosting an immense array of servers and data storage solutions. The data center market is on a trajectory of significant growth, projected to rise from $25.8 billion in 2023 to an estimated $81.2 billion by 2030. Innovations like artificial intelligence, blockchain, and the nascent metaverse are set to further increase capital spending on data centers, surpassing $500 billion by 2027.
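The market projection above implies a steep annual growth rate. Compound annual growth rate (CAGR) is the constant yearly rate that turns the starting value into the ending value over the period. The sketch below applies the standard formula to the article’s own figures ($25.8 billion in 2023 to $81.2 billion in 2030).

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


rate = cagr(25.8, 81.2, 2030 - 2023)
print(f"Implied growth: {rate:.1%} per year")  # Implied growth: 17.8% per year
```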

Edge computing represents another frontier, transforming data centers by placing compact facilities closer to data sources and end users. This proximity enables:

- Low-latency connections

- Shorter communication delays

As a result, edge sites have become an integral part of modern data center facilities, extending their reach to edge locations.
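Distance matters because signals in optical fiber travel at roughly 200,000 km/s, about two-thirds the speed of light in a vacuum. The sketch below computes only the propagation delay, a lower bound: it ignores routing detours, queuing, and processing time, and the example distances are hypothetical.

```python
SPEED_IN_FIBER_KM_PER_MS = 200  # ~200,000 km/s in optical fiber, i.e. ~200 km per ms


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone."""
    return 2 * distance_km / SPEED_IN_FIBER_KM_PER_MS


for label, km in [("edge site, same metro", 50),
                  ("regional data center", 800),
                  ("distant region", 6000)]:
    print(f"{label:>22}: >= {min_round_trip_ms(km):.1f} ms round trip")
```

Every 100 km of fiber adds at least 1 ms to a round trip, which is why latency-sensitive workloads benefit from nearby edge sites.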

The landscape of data centers, cloud data centers, and cloud computing is one of dynamic growth and innovation, constantly adapting to meet the insatiable demands of a data-driven world, and data center services are becoming ever more vital within it.

Navigating Challenges: Best Practices for Data Center Efficiency

In navigating the complex landscape of data centers, efficiency remains a paramount challenge. Some strategies to improve efficiency include:

- Using energy-efficient Uninterruptible Power Supply (UPS) systems and eco-mode operations

- Implementing high-efficiency Power Distribution Units (PDUs)

- Adopting data center design strategies such as the hot aisle/cold aisle configuration

These measures can significantly reduce energy costs and the energy spent on cooling. Green data center designs that prioritize energy efficiency are not only environmentally sound but also economically beneficial, because they lower operating costs along with environmental impact.
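The payoff from a more efficient UPS can be estimated from the energy lost inside it: input power minus the power delivered to the load. The sketch below compares two operating modes under hypothetical assumptions: a constant 500 kW IT load, 94% efficiency for standard double-conversion operation, and 98% for eco mode. Actual efficiencies vary by product and load level, and the estimate ignores the added benefit of less UPS heat for the cooling system to remove.

```python
HOURS_PER_YEAR = 8760


def annual_ups_loss_kwh(it_load_kw: float, efficiency: float) -> float:
    """Energy lost inside the UPS per year: input power minus power delivered to the load."""
    input_kw = it_load_kw / efficiency
    return (input_kw - it_load_kw) * HOURS_PER_YEAR


load_kw = 500                                  # hypothetical constant IT load
standard = annual_ups_loss_kwh(load_kw, 0.94)  # hypothetical double-conversion efficiency
eco = annual_ups_loss_kwh(load_kw, 0.98)       # hypothetical eco-mode efficiency
print(f"Standard mode losses: {standard:,.0f} kWh/year")
print(f"Eco mode losses:      {eco:,.0f} kWh/year")
print(f"Savings:              {standard - eco:,.0f} kWh/year")
```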

Beyond this, server power management features and properly tuned humidification levels for IT equipment can deliver notable energy savings. By consolidating underutilized servers onto fewer machines and employing data deduplication, which stores identical data only once, data centers can achieve significant savings in energy, licensing, and maintenance costs. Predictive maintenance, which uses analytics and intelligent monitoring to anticipate maintenance needs, supports energy conservation and enables strategic planning for future growth. These best practices illustrate the industry’s commitment to optimizing data center operations in an environmentally and economically responsible manner.
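Deduplication saves storage, and the energy needed to power and cool it, by keeping only one copy of identical data. Here is a minimal sketch of the simplest variant, fixed-size chunking with SHA-256 fingerprints. The 4 KiB chunk size and the sample data are hypothetical, and many production systems use variable-size (content-defined) chunking instead, so that inserting a few bytes early in a file doesn’t shift every later chunk.

```python
import hashlib
from typing import Dict, List, Tuple


def deduplicate(data: bytes, chunk_size: int = 4096) -> Tuple[Dict[str, bytes], List[str]]:
    """Fixed-size chunk deduplication keyed by SHA-256.

    Returns (store, recipe): each unique chunk stored once, plus the ordered
    list of chunk hashes needed to rebuild the original data.
    """
    store: Dict[str, bytes] = {}
    recipe: List[str] = []
    for offset in range(0, len(data), chunk_size):
        chunk = data[offset:offset + chunk_size]
        digest = hashlib.sha256(chunk).hexdigest()
        store.setdefault(digest, chunk)  # identical chunks are kept only once
        recipe.append(digest)
    return store, recipe


def rebuild(store: Dict[str, bytes], recipe: List[str]) -> bytes:
    """Reassemble the original bytes from the chunk store."""
    return b"".join(store[digest] for digest in recipe)


# Hypothetical data: ten identical 4 KiB blocks followed by one different block.
data = b"A" * 4096 * 10 + b"B" * 4096
store, recipe = deduplicate(data)
stored_bytes = sum(len(chunk) for chunk in store.values())
print(f"{len(recipe)} chunks referenced, {len(store)} stored")  # 11 chunks referenced, 2 stored
print(f"{len(data)} bytes -> {stored_bytes} bytes")             # 45056 bytes -> 8192 bytes
```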

Comprehensive Overview of Data Centers: Functionality, Efficiency, and Future Trends

In the vast digital landscape, data centers are critical hubs that facilitate our everyday interactions, business transactions, and consumption of digital content. From basic operations involving servers and storage systems to the complex networking that connects us all, data centers are the linchpins of the digital age. With tiers of reliability guiding their design and a shift toward distributed cloud computing led by pioneers like Hivenet, the industry is making a concerted effort to align data management with sustainability. As it evolves and expands, it continues to embrace best practices for efficiency, helping ensure that our digital future is both resilient and responsible. The data center, in all its complexity and necessity, stands at the forefront of technological progress, shaping the future of our increasingly interconnected world.

Frequently Asked Questions

What is the primary role of a data center?

The primary role of a data center is to centralize an organization's IT operations by storing, managing, and disseminating data, supporting essential services and enabling big data analytics and remote work.

How do modern data centers differ from older ones?

Modern data centers differ from older ones by prioritizing security, reliability, virtualization, and integration with cloud services for enhanced flexibility and scalability.

What are data center tiers, and why are they important?

Data center tiers are a classification system that indicates a data center's expected service uptime and reliability. They are important because they help organizations choose the right level of service for their needs.

How does the distributed cloud model contribute to sustainability?

The distributed cloud model contributes to sustainability by reducing the environmental impact of traditional data centers through efficient data storage and management across multiple locations, resulting in significant reductions in energy and water usage. This aligns data management with environmental sustainability.

What are some best practices for improving data center efficiency?

To improve data center efficiency, consider using energy-efficient UPS systems, implementing hot aisle/cold aisle configurations, employing data deduplication, adjusting IT equipment's humidification levels, and using predictive maintenance to anticipate and address maintenance needs. These practices can help optimize your data center's performance and reduce energy consumption.
