Embracing P2P: All about peer-to-peer technology and how it harnesses decentralized storage and scalability

KEY TAKEAWAYS

- In a peer-to-peer network, all computers are created equal and cooperate directly with one another to deliver a service. No intermediary, no centralized server.

- A peer-to-peer network can include millions of computers. It relies on efficient, scalable algorithms that keep the service from degrading as the network grows and as nodes become overloaded or leave the network.

- Hivenet's storage service relies on these core peer-to-peer principles and on the large amount of unused capacity sitting in our personal devices at the edge of the network. In the years to come, computation and storage will inevitably move away from centralized servers.

The evolution of file sharing: P2P technology and its pioneers

Napster, Gnutella, Kazaa, BitTorrent: we have been sharing files on the Internet with tools like these for a long time. Did you know that they all rely on peer-to-peer (P2P) technology?

In a peer-to-peer network, all computers are created equal, are endowed with the same rights, and cooperate directly with one another to deliver a service. No hierarchy, no intermediary, no orchestra conductor.

In a peer-to-peer infrastructure, users share resources through direct exchanges between computers, called “nodes”. Data is distributed among the nodes instead of being sent to central servers for processing. Unlike in the client-server model, each node plays a symmetric and autonomous role in delivering the service to the end user.

Core characteristics of peer-to-peer systems

Peer-to-peer systems are complex to design and operate, and they share a set of distinctive characteristics:

- Symmetric, distributed and decentralized:

All the nodes play a similar role, acting as both client and server. They fetch, distribute, and process content.
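To make the "both client and server" idea concrete, here is a minimal in-memory sketch. The `Peer` class and its `handle_get` and `fetch` methods are hypothetical names, and direct method calls stand in for real network messages. The point it illustrates: once a peer has fetched content as a client, it can immediately serve that content to others, which is how file-sharing networks spread popular data.

```python
from __future__ import annotations


class Peer:
    """A node that plays both roles: it answers requests (server) and issues them (client)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.store: dict[str, bytes] = {}  # content this peer currently holds

    def handle_get(self, key: str) -> bytes | None:
        """Server role: return the requested content if this peer holds it."""
        return self.store.get(key)

    def fetch(self, key: str, peers: list[Peer]) -> bytes | None:
        """Client role: ask other peers in turn until one of them has the content."""
        if key in self.store:
            return self.store[key]
        for peer in peers:
            if peer is self:
                continue
            data = peer.handle_get(key)
            if data is not None:
                self.store[key] = data  # keep a copy so this peer can now serve it too
                return data
        return None


if __name__ == "__main__":
    alice, bob, carol = Peer("alice"), Peer("bob"), Peer("carol")
    alice.store["song.mp3"] = b"..."
    carol.fetch("song.mp3", [alice, bob])  # carol acts as a client of alice
    print(bob.fetch("song.mp3", [carol]))  # carol now acts as a server for bob
```

A real node would run the server role concurrently with the client role (for example on a listening socket), but the division of responsibilities is the same.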

- Dynamic participants:

Peer-to-peer systems must be resilient to nodes joining and leaving, whereas a centralized system expects its servers to remain up at all times.

- Resource localization:

One of the key challenges in a P2P network is finding the peer that hosts the requested data. A well-known technique is the Distributed Hash Table (DHT), a decentralized, distributed system that provides a lookup service similar to a hash table. One of the most cited papers on the topic is "Kademlia: A Peer-to-peer Information System Based on the XOR Metric" by Petar Maymounkov and David Mazières. It introduces the Kademlia DHT, which has since become widely used in peer-to-peer applications. Such algorithms remain efficient and scalable even with very large numbers of nodes and resources: in Kademlia, a lookup contacts only O(log n) nodes in a network of n nodes.
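The core of Kademlia is its distance function: the distance between two identifiers is their bitwise XOR, read as an integer. The sketch below shows that metric, how a node would sort a contact into a k-bucket, and how it picks the k known nodes closest to a key. It is a local, in-memory sketch under stated assumptions: deriving IDs by hashing a name with SHA-1 and the helper names `make_id`, `bucket_index` and `k_closest` are illustrative, and the network RPCs (PING, STORE, FIND_NODE, FIND_VALUE) and the iterative lookup are not implemented.

```python
from __future__ import annotations

import hashlib


def make_id(name: str) -> int:
    """Map a name to a 160-bit identifier (hashing with SHA-1 is an illustrative choice)."""
    return int.from_bytes(hashlib.sha1(name.encode()).digest(), "big")


def xor_distance(a: int, b: int) -> int:
    """Kademlia's metric: the bitwise XOR of two identifiers, read as an integer."""
    return a ^ b


def bucket_index(own_id: int, other_id: int) -> int:
    """k-bucket for a contact: position of the highest bit where the two IDs differ."""
    distance = xor_distance(own_id, other_id)
    if distance == 0:
        raise ValueError("a node never files itself in its own buckets")
    return distance.bit_length() - 1


def k_closest(target: int, known_ids: list[int], k: int = 20) -> list[int]:
    """The k known identifiers closest to target; a lookup keeps querying these."""
    return sorted(known_ids, key=lambda node: xor_distance(node, target))[:k]


if __name__ == "__main__":
    peers = [make_id(f"peer-{i}") for i in range(1000)]
    key = make_id("holiday-photos.zip")  # keys live in the same ID space as nodes
    for node in k_closest(key, peers, k=3):
        print(f"{node:040x}  highest differing bit: {xor_distance(node, key).bit_length() - 1}")
```

The default `k=20` follows the value suggested in the Kademlia paper. Because keys and nodes share one ID space, "who stores this key?" and "who is close to this node?" become the same question, which is what lets each hop of a lookup roughly halve the remaining distance.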

- Rebalancing and replication:

As nodes become overloaded or leave the network, the peer-to-peer system must ensure that services remain accessible, available, and performant, and that data remains persistent.
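One common way to handle departures, used by DHTs such as Kademlia, is to keep each piece of data on the r nodes whose IDs are closest to the data's key. The sketch below assumes that placement rule (it is not a description of any specific product) and computes which new copies must be made when a node leaves. The function names and the replication factor `r` are hypothetical.

```python
from __future__ import annotations

import hashlib


def make_id(name: str) -> int:
    """Hash node names and data keys into one shared 160-bit ID space."""
    return int.from_bytes(hashlib.sha1(name.encode()).digest(), "big")


def replica_set(key: str, nodes: set[str], r: int) -> set[str]:
    """The r nodes whose IDs are closest (by XOR) to the key's ID hold its copies."""
    key_id = make_id(key)
    return set(sorted(nodes, key=lambda n: make_id(n) ^ key_id)[:r])


def repair_plan(keys: list[str], nodes: set[str], departed: str, r: int) -> list[tuple[str, str]]:
    """List the (key, node) copies to create so every key is back to r replicas after `departed` leaves."""
    remaining = nodes - {departed}
    plan = []
    for key in keys:
        before = replica_set(key, nodes, r)
        if departed not in before:
            continue  # this key lost no copy, nothing to do
        after = replica_set(key, remaining, r)
        # Surviving replicas keep their copies; only newly responsible nodes need one.
        plan.extend((key, node) for node in sorted(after - before))
    return plan


if __name__ == "__main__":
    nodes = {f"node-{i}" for i in range(8)}
    keys = [f"file-{i}" for i in range(5)]
    leaving = sorted(replica_set("file-0", nodes, 3))[0]
    for key, node in repair_plan(keys, nodes, leaving, r=3):
        print(f"copy {key} to {node}")
```

A useful property of this rule is locality: a departure only affects the keys that node was responsible for, and each of those needs exactly one new copy, so repair work does not grow with the total size of the network.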

- Scalability and security:

A peer-to-peer network can contain millions of nodes: Skype, originally built on peer-to-peer technology, had over 300 million users at its peak. Such networks need robust security mechanisms that don't degrade as the network grows.

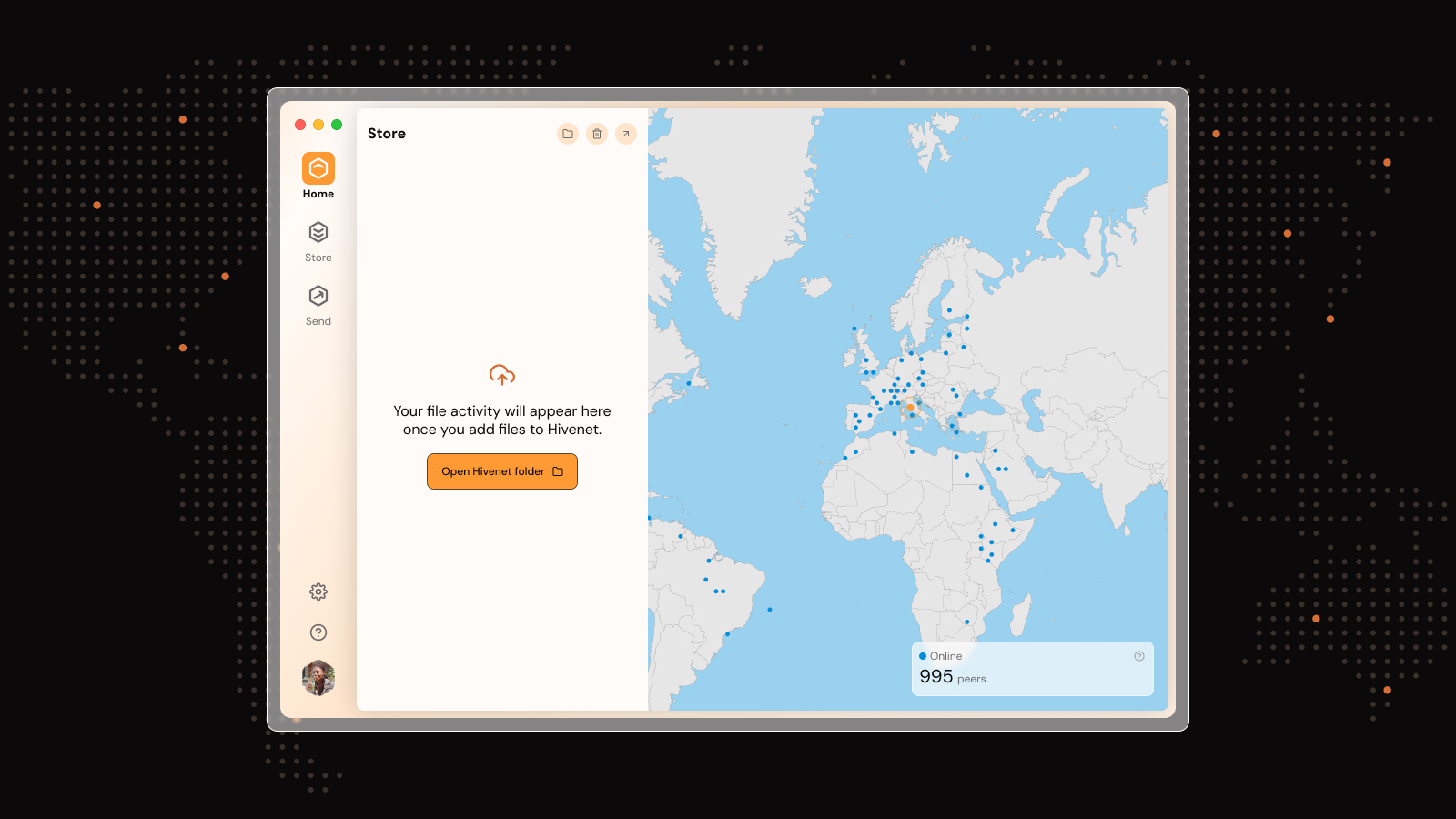

Hivenet's P2P storage: A new paradigm for data management

Hivenet's peer-to-peer storage relies on these core principles. It uses the open source IPFS protocol for the core filesystem layer, on top of which we have built additional services and features to provide:

- End-to-end encryption:

No private data leaves the end user's device unencrypted. The encryption model allows data to be shared among multiple participants without replicating the content or sharing keys.

- Proof of content integrity:

Participants in the Hivenet network store other users' data, and they are incentivized to do so as long as they keep providing proof that the content is intact.
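The source does not describe how Hivenet's proofs work. As an illustration of the general idea only, here is a classic, simple challenge-response scheme: before uploading, the owner precomputes a few (random nonce, expected hash) pairs; later it sends one nonce, and the storage node must hash that nonce together with the full stored data. Because the nonce is unpredictable, the node cannot answer without still holding the data. The function names are hypothetical, and each pair can be used only once; production systems generally use more compact proofs.

```python
from __future__ import annotations

import hashlib
import hmac
import secrets


def prepare_challenges(data: bytes, count: int) -> list[tuple[bytes, bytes]]:
    """Owner, before upload: precompute (nonce, expected digest) pairs, then keep only these."""
    pairs = []
    for _ in range(count):
        nonce = secrets.token_bytes(16)  # unpredictable, so answers cannot be precomputed
        pairs.append((nonce, hashlib.sha256(nonce + data).digest()))
    return pairs


def respond(stored: bytes, nonce: bytes) -> bytes:
    """Storage node: prove possession by hashing the fresh nonce together with the stored bytes."""
    return hashlib.sha256(nonce + stored).digest()


def verify(expected: bytes, response: bytes) -> bool:
    """Owner: compare the response with the precomputed digest in constant time."""
    return hmac.compare_digest(expected, response)


if __name__ == "__main__":
    file_bytes = b"quarterly report contents"
    challenges = prepare_challenges(file_bytes, count=10)
    nonce, expected = challenges.pop()  # each pair is used only once
    print(verify(expected, respond(file_bytes, nonce)))
    print(verify(expected, respond(b"tampered contents", nonce)))
```

The owner never needs to keep the file itself to check a proof, only the small list of precomputed pairs, which fits a setting where the data has been handed off to other people's devices.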

- Location awareness:

Hivenet's peer-to-peer placement algorithms take into account the user's privacy requirements and preferred locations for both data storage and processing.
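A placement policy of this kind can be thought of as a hard filter followed by a ranking. The sketch below assumes a hypothetical data model (a `Node` with a region label and free space) and a policy with a set of allowed regions, which are never violated, plus an ordered list of preferred regions. It is not Hivenet's algorithm, only an illustration of how privacy constraints and location preferences can be combined.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    node_id: str
    region: str     # hypothetical region label, e.g. "eu-fr"
    free_gb: float  # unused storage the node offers


def place(nodes: list[Node], allowed: set[str], preferred: list[str], r: int) -> list[Node]:
    """Choose r nodes: never outside `allowed`, preferred regions first, then the most free space."""
    candidates = [n for n in nodes if n.region in allowed]  # hard privacy constraint
    if len(candidates) < r:
        raise ValueError(f"only {len(candidates)} eligible nodes, {r} needed")
    rank = {region: i for i, region in enumerate(preferred)}
    # Regions missing from `preferred` are still allowed, just ranked after all preferred ones.
    candidates.sort(key=lambda n: (rank.get(n.region, len(preferred)), -n.free_gb))
    return candidates[:r]


if __name__ == "__main__":
    fleet = [
        Node("a", "eu-fr", 10), Node("b", "us-east", 100),
        Node("c", "eu-de", 50), Node("d", "eu-fr", 5),
    ]
    chosen = place(fleet, allowed={"eu-fr", "eu-de"}, preferred=["eu-fr"], r=2)
    print([n.node_id for n in chosen])
```

Failing loudly when too few eligible nodes exist, rather than silently falling back to a disallowed region, is the design choice that keeps the privacy requirement a guarantee instead of a preference.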

- Error correction:

When nodes suddenly go offline, the data they hold becomes unavailable. When this happens, Hivenet recreates the lost data and distributes it to other nodes, ensuring the durability of the stored files.
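Recreating lost data without keeping full copies everywhere is the job of an erasure code. The source does not say which code Hivenet uses, so the sketch below shows the simplest one as an assumption: a single XOR parity shard, as in RAID 5. Data is split into k shards plus one parity shard stored on different nodes, and any one missing shard equals the XOR of all the others. Production systems typically use Reed-Solomon codes with several parity shards to survive multiple simultaneous failures. Function names are hypothetical.

```python
from __future__ import annotations

from functools import reduce
from typing import Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> list[bytes]:
    """Split data into k equal, zero-padded shards and append one XOR parity shard."""
    size = -(-len(data) // k)  # ceiling division
    padded = data.ljust(size * k, b"\0")
    shards = [padded[i * size:(i + 1) * size] for i in range(k)]
    return shards + [reduce(xor_bytes, shards)]


def recover(shards: list[Optional[bytes]]) -> list[bytes]:
    """Rebuild at most one lost shard (marked None): it equals the XOR of all the others."""
    missing = [i for i, s in enumerate(shards) if s is None]
    if len(missing) > 1:
        raise ValueError("single XOR parity can rebuild only one lost shard")
    rebuilt = list(shards)
    if missing:
        rebuilt[missing[0]] = reduce(xor_bytes, [s for s in shards if s is not None])
    return rebuilt


def decode(shards: list[bytes], original_len: int) -> bytes:
    """Join the data shards (dropping parity) and strip the padding."""
    return b"".join(shards[:-1])[:original_len]


if __name__ == "__main__":
    data = b"files spread across peers"
    shards = encode(data, k=4)  # 4 data shards + 1 parity, stored on 5 nodes
    shards[1] = None            # the node holding shard 1 goes offline
    print(decode(recover(shards), len(data)))
```

Here the storage overhead is (k + 1) / k, i.e. 25% extra for k = 4, compared with 100% extra for keeping a second full copy; the trade-off is that only one failure per group can be repaired.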

The rising potential of peer-to-peer technologies

One may wonder why peer-to-peer technologies, which have been mature since the mid-2000s, aren't more widely used. In fact, they are already omnipresent in gaming, the crypto world, and content distribution. Windows 10 updates, for example, are distributed using peer-to-peer technology.

But only recently have changes in the technology landscape lined up for peer-to-peer to reach its full potential:

- In many countries, fiber is now more common than DSL, which brings upload speeds up to par with download speeds.

- More data than ever is now produced at the edge; connected IoT devices now outnumber non-IoT devices.

- Edge devices, now numbering in the billions, have more processing power and storage capacity than ever.

The future of decentralized storage and computing

In the years to come, computation and storage will inevitably move away from centralized, distant servers toward distributed systems closer to end users. The amount of data produced and stored on the Internet is massive and growing by approximately 20% every year. The world's data storage capacity is expected to reach 13 ZB by 2024, up from 6.8 ZB today. Rather than relying on huge data centers to store all this data, Hivenet's peer-to-peer storage system will draw on the large amount of unused capacity sitting in our personal devices at the edge of the network.
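As a rough consistency check on these figures, assume (our assumption, not a figure from the source) that storage capacity grows at the same ~20% annual rate quoted for data. Going from 6.8 ZB to 13 ZB then takes n years, where:

```latex
6.8 \times 1.2^{n} = 13
\quad\Longrightarrow\quad
n = \frac{\ln(13 / 6.8)}{\ln 1.2} \approx \frac{0.648}{0.182} \approx 3.6 \text{ years}
```

Under that assumption, the two capacity figures correspond to a projection made roughly three to four years ahead of 2024.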
