Traditional cloud storage and its data center energy consumption are unsustainable

Technology has made the world more connected and daily life more convenient. Yet behind every click, stream, and upload lies a significant environmental cost: data centers. As shown in the visual below from Visual Capitalist, the world has about 11,800 data centers, and the United States alone houses nearly half of them (5,381). Because these facilities consume enormous amounts of energy, that concentration poses a major environmental threat. To mitigate it, many data center operators are focusing on energy efficiency and transitioning to renewable energy sources, such as solar and wind, to reduce their carbon footprint.

How do data centers work?

Data centers store, process, and distribute data. Every online action (streaming a video, sending an email, or using cloud services) relies on them. These facilities require continuous power to keep servers operational and at optimal temperatures, often depending on traditional energy sources that contribute to emissions. The significant electricity consumption of data centers highlights the importance of managing energy usage to enhance environmental sustainability.

Beyond power consumption, data centers require extensive cooling systems that use vast amounts of water. Some of the largest facilities consume millions of gallons of water daily, depleting local supplies. Additionally, land use for these centers can lead to deforestation and habitat destruction. Dynamic resource allocation in cloud storage models can improve scalability in public clouds, but it also raises energy-efficiency concerns, because it can leave resources idle that keep drawing power without doing useful work.

The rapid expansion of data centers in the U.S.

With nearly half of the world’s data centers, the U.S. dominates cloud infrastructure. In recent years, major tech companies like Microsoft, Amazon, and Google have poured billions into building more data storage and computing facilities to support AI, cloud computing, and big data. Google Cloud, for instance, has been at the forefront of sustainability initiatives, aiming to reduce the environmental impact of its operations.

This surge in demand comes at a cost: rising energy consumption. For example, a single AI-driven query uses roughly 10 times as much electricity as a basic internet search. The growing reliance on AI-powered applications, blockchain, and digital services is straining energy grids. Green cloud computing offers a solution by promoting strategies to optimize operations, increase energy efficiency, and reduce the overall carbon footprint.

According to Goldman Sachs Research, data centers are on track to become the largest driver of electricity demand in the U.S. By 2030, they are expected to consume 8% of the country’s total electricity, up from 3% in 2022. This unsustainable trend highlights the need for alternative models.
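To put the projection in perspective, here is a minimal Python sketch of the arithmetic behind the two figures quoted above. It assumes the share grows at a constant compound rate between 2022 and 2030, which is a simplification for illustration and not part of the Goldman Sachs forecast. Note that it describes growth in the share of electricity, not in absolute consumption.

```python
# Figures quoted above: data centers' share of total U.S. electricity use.
share_2022 = 0.03
share_2030 = 0.08
years = 2030 - 2022


def implied_annual_growth(start: float, end: float, periods: int) -> float:
    """Constant yearly growth rate that takes `start` to `end` in `periods` years."""
    return (end / start) ** (1 / periods) - 1


multiple = share_2030 / share_2022
rate = implied_annual_growth(share_2022, share_2030, years)

print(f"Share grows {multiple:.2f}x over {years} years")
print(f"Implied compound growth in share: {rate:.1%} per year")
```

Under that assumption, the share would have to grow by about 13% every year for eight years in a row.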

The carbon footprint of traditional cloud computing

Data centers consume massive amounts of electricity, primarily from fossil fuels, leading to high carbon emissions. Cooling systems require large amounts of water and power. As demand grows, the environmental consequences worsen. Emphasizing energy efficiency in cloud and data center operations can significantly reduce electricity consumption and mitigate these environmental impacts.

Additionally, data centers contribute to electronic waste (e-waste). As servers and equipment become outdated, they are discarded, creating hazardous waste. Components often contain toxic substances like lead, mercury, and cadmium, which pollute the environment. Transitioning to renewable energy sources, such as solar and wind, can further reduce the environmental impact of data centers and enhance their sustainability.

E-waste and resource inefficiency

The rapid growth of cloud computing has led to a significant increase in electronic waste (e-waste) and resource inefficiency. The production and disposal of electronic devices, such as servers and data storage equipment, contribute to the growing problem of e-waste. According to the UN's Global E-waste Monitor 2020, the world generated 53.6 million metric tons of e-waste in 2019, with only 17.4% being properly recycled.
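As a quick check on what that recycling rate means in absolute terms, the sketch below takes the 53.6 figure in millions of metric tons (Mt), the unit the Global E-waste Monitor reports in, and splits it using the 17.4% rate. The variable names are illustrative only.

```python
# Global E-waste Monitor 2020 figures for 2019, as quoted above.
generated_mt = 53.6      # e-waste generated, in millions of metric tons (Mt)
recycled_share = 0.174   # fraction documented as properly recycled

recycled_mt = generated_mt * recycled_share
unrecycled_mt = generated_mt - recycled_mt

print(f"Properly recycled:     {recycled_mt:.1f} Mt")
print(f"Not properly recycled: {unrecycled_mt:.1f} Mt")
```

In other words, roughly 44 Mt of the e-waste generated that year was not documented as properly recycled.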

Resource inefficiency is another significant issue in traditional cloud computing. Centralized data centers need dedicated servers, buildings, and cooling, all of which drive energy consumption and greenhouse gas emissions. In contrast, distributed cloud storage solutions like Hivenet’s can reduce energy consumption by up to 77% and minimize e-waste by utilizing existing resources.

Why the current cloud model cannot sustain future demand

The traditional data center model is reaching its limits. It centralizes data, creating security vulnerabilities, failure points, and resource inefficiencies. As data demands grow, a more scalable, efficient, and eco-friendly alternative is essential.

Dynamic resource allocation in cloud storage models can address resource inefficiencies by adjusting resources based on demand. However, it also raises concerns regarding energy efficiency, as idle resources may consume energy without being utilized.
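The trade-off can be illustrated with a small Python sketch. Everything in it is hypothetical: the hourly demand profile, the wattages, and the one-server headroom are assumptions chosen for illustration, not measurements from any provider. It compares a fleet sized for peak demand with one that scales each hour, and counts the server-hours that stay powered on but unused.

```python
import math

# Hypothetical hourly demand, measured in "servers' worth" of load.
demand = [2, 2, 3, 8, 12, 9, 4, 2]

SERVER_CAPACITY = 1.0  # load a single server can handle (assumed)
IDLE_WATTS = 100       # assumed draw of a powered-on but idle server
PEAK_WATTS = 250       # assumed draw of a fully loaded server


def energy_wh(servers_on, load):
    """One hour of energy: each powered-on server pays the idle cost,
    plus a part proportional to the load actually served."""
    return servers_on * IDLE_WATTS + load * (PEAK_WATTS - IDLE_WATTS)


def static_plan(demand):
    """Keep enough servers on all day to cover the peak hour."""
    peak = math.ceil(max(demand) / SERVER_CAPACITY)
    return [peak] * len(demand)


def dynamic_plan(demand, headroom=1):
    """Scale every hour, keeping `headroom` spare servers for bursts."""
    return [math.ceil(d / SERVER_CAPACITY) + headroom for d in demand]


def total_energy(plan, demand):
    return sum(energy_wh(s, d) for s, d in zip(plan, demand))


def idle_server_hours(plan, demand):
    return sum(s - d / SERVER_CAPACITY for s, d in zip(plan, demand))


for name, plan in [("static", static_plan(demand)), ("dynamic", dynamic_plan(demand))]:
    print(name, total_energy(plan, demand), "Wh,",
          idle_server_hours(plan, demand), "idle server-hours")
```

With these made-up numbers, scaling with demand cuts energy use substantially, but the spare capacity kept for bursts still draws power while idle, which is the concern raised above.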

Large cloud providers also dominate the industry, raising concerns over data sovereignty and user privacy. A decentralized approach would distribute control, enhance security, and promote sustainability.

The future of cloud computing: distributed cloud infrastructure

What is distributed cloud infrastructure?

Unlike traditional data centers, distributed cloud infrastructure operates through decentralized networks. This system uses existing computing resources worldwide, reducing reliance on centralized storage hubs.

Green cloud computing plays a crucial role in promoting sustainability by optimizing operations, increasing energy efficiency, and reducing the overall carbon footprint of cloud services.

Instead of storing and processing data in massive buildings, distributed networks spread workloads across multiple devices. This approach significantly reduces the environmental footprint.

Benefits of distributed cloud infrastructure

- Lower environmental impact: Uses existing resources, reducing the need for new infrastructure and energy use. Distributed cloud infrastructure also enhances energy efficiency by optimizing resource usage and leveraging renewable energy sources.

- Increased security and resilience: Decentralization eliminates single points of failure and strengthens data security.

- Cost efficiency: Reduces expenses for providers and users by leveraging underutilized computing power.

- Scalability: Expands naturally as more users contribute resources without requiring new facilities.

- Less electronic waste: Extends device lifespans and decreases demand for new server hardware.

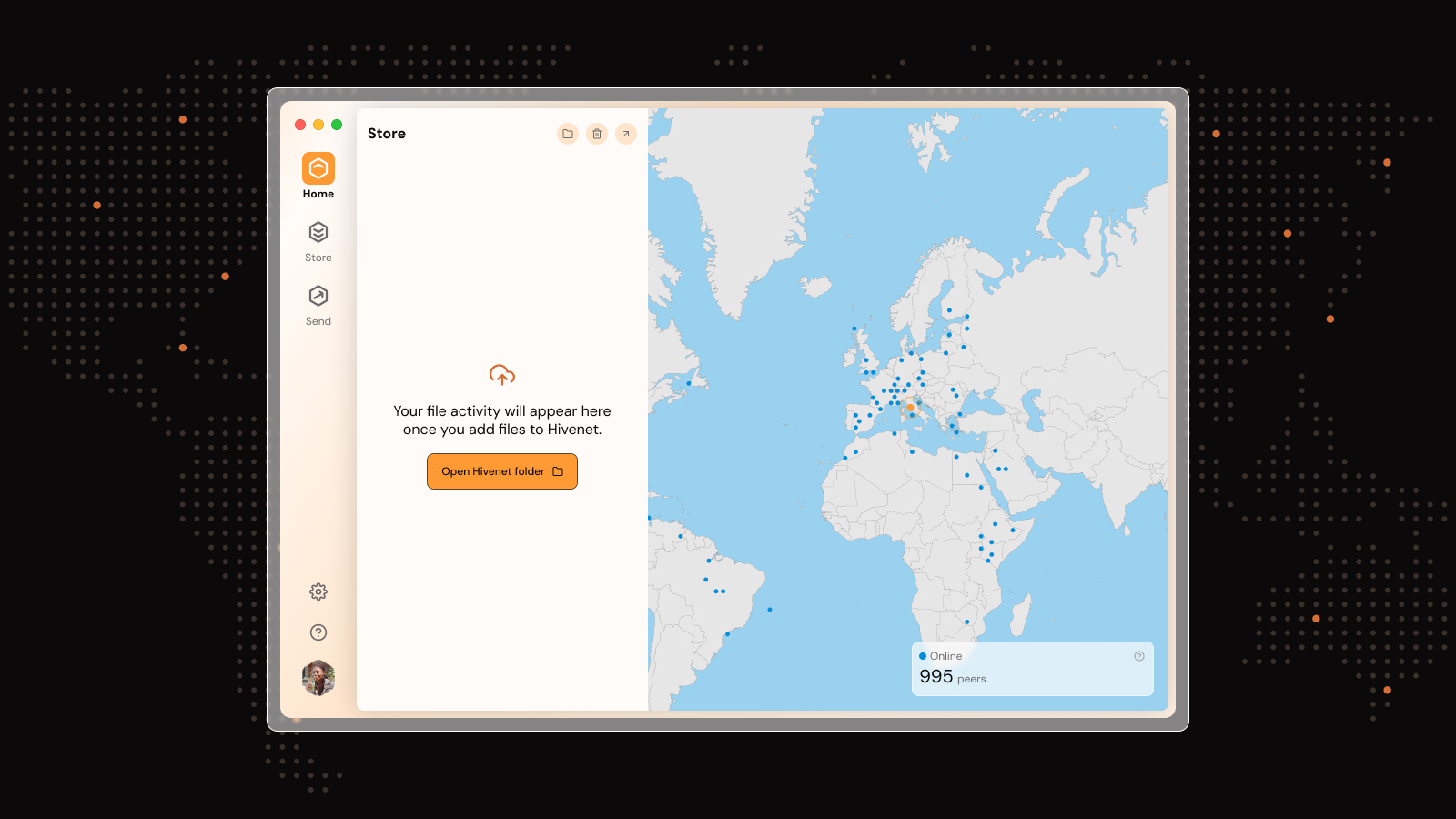

How Hivenet is pioneering sustainable cloud computing

Hivenet’s distributed cloud model provides an alternative to traditional data centers. By allowing users to contribute unused computing power, Hivenet reduces the need for large-scale infrastructure. Additionally, Hivenet leverages renewable energy sources to power its operations, significantly reducing its environmental impact.

Hivenet’s network includes encryption, redundancy, and self-healing features to ensure security and reliability. This decentralized model provides cloud computing benefits while avoiding the environmental costs associated with traditional data centers.

Why the future of cloud computing must be decentralized

Reducing carbon emissions with distributed cloud solutions

Lowering the energy consumption of data centers is essential in tackling climate change. Distributed cloud models like Hivenet’s can significantly reduce power use and reliance on fossil fuels.

The electricity that data centers consume is a critical sustainability concern. Distributed cloud solutions can optimize energy usage through efficient energy management practices and technologies such as AI and machine learning, which in turn reduces carbon emissions.

Expanding cloud accessibility

A decentralized system broadens access to cloud computing, allowing users in underserved regions to benefit from high-quality digital services without requiring local data centers.

Ensuring long-term data sustainability

As global data storage needs increase, distributed cloud infrastructure offers a sustainable, flexible, and cost-effective solution. Compared to traditional data centers, decentralized networks provide better long-term viability.

Frequently asked questions (FAQ)

How much energy do traditional data centers use?

Data centers currently consume around 1% of global electricity and are projected to account for 8% of U.S. electricity demand by 2030.

How does distributed cloud computing save energy?

By using existing computing power instead of building new infrastructure, distributed cloud models eliminate the need for additional power-intensive data centers, reducing energy consumption.

Is decentralized cloud computing secure?

Yes. With encryption, redundancy, and data fragmentation, distributed networks reduce security risks by eliminating single points of failure.
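To make the idea of fragmentation and redundancy concrete, here is a minimal Python sketch. It is not Hivenet's protocol: the node names, the round-robin placement, and the replica count are all assumptions for illustration, and encryption is left out (a real system would encrypt the data before splitting it). The sketch splits data into fragments, stores each fragment on two nodes, and rebuilds the original after one node goes offline, checking each fragment against a SHA-256 digest.

```python
import hashlib


def fragment(data: bytes, size: int) -> list[bytes]:
    """Split data into fixed-size fragments (the last one may be shorter)."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def place(fragments, nodes, replicas):
    """Round-robin placement: fragment i is copied to `replicas` consecutive nodes."""
    placement = {node: {} for node in nodes}
    for i, frag in enumerate(fragments):
        for r in range(replicas):
            node = nodes[(i + r) % len(nodes)]
            placement[node][i] = frag
    return placement


def recover(placement, digests, failed):
    """Rebuild the data from surviving nodes, verifying each fragment's digest."""
    out = []
    for i, digest in enumerate(digests):
        for node, store in placement.items():
            if node in failed:
                continue
            frag = store.get(i)
            if frag is not None and hashlib.sha256(frag).hexdigest() == digest:
                out.append(frag)
                break
        else:
            raise LookupError(f"fragment {i} is unavailable")
    return b"".join(out)


data = b"distributed storage keeps copies on several devices"
nodes = ["node-a", "node-b", "node-c", "node-d"]  # hypothetical devices
frags = fragment(data, 8)
digests = [hashlib.sha256(f).hexdigest() for f in frags]
placement = place(frags, nodes, replicas=2)

restored = recover(placement, digests, failed={"node-b"})
print(restored == data)
```

With two copies of each fragment, losing any single node leaves the data recoverable. Losing two neighboring nodes in this simple layout does not, which is why real networks use more replicas or erasure coding.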

Can individuals participate in a distributed cloud network?

Yes! Anyone with unused computing power can join a distributed cloud network like Hivenet and benefit from lower costs and enhanced security.

Why should businesses consider a distributed cloud model?

Companies can cut expenses, improve data security, and support sustainability by using distributed cloud computing instead of relying on centralized providers.
