Creating reliable file systems in P2P networks with unreliable nodes

Is it possible to provide a secure and reliable service using insecure and unreliable nodes? At first glance, building a reliable system from unreliable components seems counterintuitive. However, by leveraging redundancy, forward error correction, and intelligent node behavior modeling, it is possible to create a secure and reliable P2P service using unreliable nodes.

The role of redundancy in critical systems

Critical systems, such as airplanes or spacecraft, cannot afford failure. To ensure reliability, these systems rely on the engineering concept of redundancy: the duplication of critical components. Peer-to-peer (P2P) systems are well suited to this approach. They are composed of thousands or millions of similar nodes, so the work done by any one unreliable node can easily be duplicated on others.

Forward error correction for optimized storage

Error correction codes were invented in the 1950s to detect and repair errors over noisy communication channels; Reed-Solomon codes, one of the best-known families, followed in 1960. These codes add redundancy to the transmitted information, so the receiver can correct errors without asking for a retransmission. Similar strategies, like RAID 6, are used in disk arrays to survive drive failures.
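To make the idea of "adding redundancy" concrete, here is a minimal sketch using a single XOR parity shard. This is deliberately simpler than Reed-Solomon coding (it tolerates only one lost shard, and all shards must have the same length), and the function names are illustrative assumptions rather than anything from Hivenet's code.

```python
from functools import reduce

def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))

def make_parity(shards: list[bytes]) -> bytes:
    """Build one redundant shard: the XOR of all data shards."""
    return reduce(xor_bytes, shards)

def recover_missing(surviving: list[bytes], parity: bytes) -> bytes:
    """Rebuild the single missing data shard from the survivors and the parity."""
    return reduce(xor_bytes, surviving, parity)

# Three equal-length data shards plus one parity shard.
data = [b"node", b"peer", b"file"]
parity = make_parity(data)

# Pretend the second shard was lost, then rebuild it.
recovered = recover_missing([data[0], data[2]], parity)
print(recovered)  # b'peer'
```

Reed-Solomon codes generalize this principle so that several redundant shards can repair several losses at once.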

Applying redundancy strategies to Hivenet's P2P file systems

In Hivenet's P2P file systems, files are split into shards of data and spread across the network. Additional shards are created to account for nodes disappearing or hardware failures. For example, assume 100 encrypted shards are generated from your file and sent to 100 peers, in such a way that only 70 of them are needed to rebuild the original file. Missing shards are regenerated as soon as we discover nodes leaving. The 30 extra shards add roughly 43% to the stored size (100/70 ≈ 1.43 times the original), yet the probability of being unable to access the content is significantly lower than with simple replication, which needs at least twice the storage just to survive one lost copy.
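The comparison with replication can be checked with a short calculation. The sketch below assumes each node is online independently with the same probability `p` (set to 0.9 here purely for illustration; real nodes are neither independent nor identical), and the function names are hypothetical.

```python
from math import comb

def erasure_unavailable(n: int, k: int, p: float) -> float:
    """Probability that fewer than k of n shards are reachable,
    assuming each node is online independently with probability p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k))

def replication_unavailable(copies: int, p: float) -> float:
    """Probability that every full copy is offline at the same time."""
    return (1 - p) ** copies

def storage_factor(n: int, k: int) -> float:
    """Stored bytes divided by original bytes for a k-of-n scheme."""
    return n / k

p = 0.9  # assumed per-node availability, not a measured Hivenet figure

print(f"70-of-100 shards: {storage_factor(100, 70):.2f}x storage, "
      f"P(unavailable) = {erasure_unavailable(100, 70, p):.2e}")
for copies in (2, 3):
    print(f"{copies} full copies:   {copies:.2f}x storage, "
          f"P(unavailable) = {replication_unavailable(copies, p):.2e}")
```

Under these assumptions the sharded scheme uses less storage than even two full copies while being far less likely to lose access than three.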

Modeling node behaviors for optimal placement

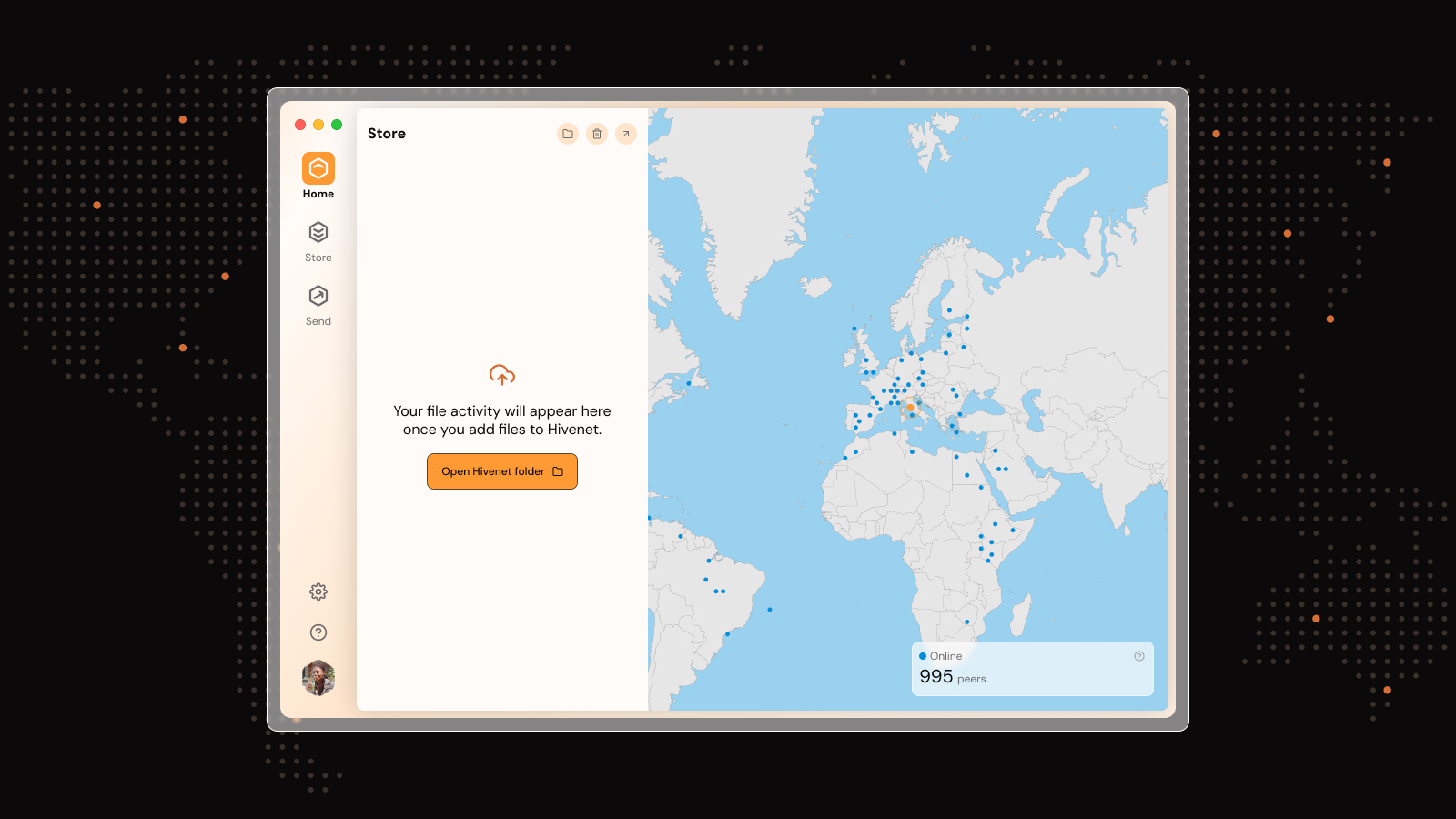

Nodes in the Hivenet P2P network all have the same role but behave differently. The computer in your bedroom is turned off every night, but your NAS is on 24/7. Usage patterns and availability change over the course of the day and vary between nodes and across geographies. Hivenet learns the behavior of each node and places shards so that enough of the nodes holding them are online when the data is needed.
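As an illustration of availability-aware placement, the sketch below represents each node by a hypothetical 24-entry profile (the probability of being online during each hour) and greedily picks nodes that raise the worst hour of the day. This is an assumed, simplified heuristic for explanation only, not Hivenet's actual placement algorithm.

```python
def greedy_placement(profiles: dict[str, list[float]], count: int) -> list[str]:
    """Pick `count` nodes so the expected number online in the worst hour
    is as high as a simple greedy search can make it."""
    chosen: list[str] = []
    totals = [0.0] * 24          # expected nodes online per hour so far
    remaining = set(profiles)
    for _ in range(min(count, len(profiles))):
        # Choose the node that most improves the weakest hour
        # (ties are broken alphabetically for determinism).
        best = max(
            sorted(remaining),
            key=lambda n: min(t + a for t, a in zip(totals, profiles[n])),
        )
        chosen.append(best)
        remaining.remove(best)
        totals = [t + a for t, a in zip(totals, profiles[best])]
    return chosen

# Hypothetical hourly profiles: two daytime desktops and one night-time node.
day = [0.95 if 8 <= h < 20 else 0.05 for h in range(24)]
night = [0.05 if 8 <= h < 20 else 0.95 for h in range(24)]
profiles = {"desktop_a": day, "desktop_b": day, "night_node": night}

placement = greedy_placement(profiles, 2)
print(placement)  # ['desktop_a', 'night_node']
```

Pairing a daytime machine with a night-time one covers the full day, whereas two daytime desktops would leave the night hours almost uncovered.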

Ensuring data persistence and privacy

Keeping data persistent and accessible is vital in P2P systems. Nodes may experience hardware failures and never come back. A node that has not answered proof-of-storage challenges for some time, or that has been reported unavailable for a long time, is marked as failed, and the P2P network starts reconstructing the data it held on other nodes. To protect data privacy, data is encrypted before it leaves the user's device. Neither Hivenet nor any of the nodes storing this data can decrypt the content.
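The failure-detection rule described above could be sketched as follows. The record layout, the seven-day timeout, and the function names are all assumptions made for the example; the article does not state the actual thresholds or data structures.

```python
from dataclasses import dataclass

# Assumed threshold: a node silent for 7 days is treated as failed.
FAILURE_TIMEOUT_S = 7 * 24 * 3600

@dataclass
class NodeRecord:
    node_id: str
    last_proof_ok: float   # Unix time of the last successful proof of storage
    shard_ids: list[str]   # shards this node is supposed to hold

def find_failed(nodes: list[NodeRecord], now: float,
                timeout: float = FAILURE_TIMEOUT_S) -> list[NodeRecord]:
    """Return nodes whose last successful proof is older than the timeout."""
    return [n for n in nodes if now - n.last_proof_ok > timeout]

def shards_to_rebuild(nodes: list[NodeRecord], now: float,
                      timeout: float = FAILURE_TIMEOUT_S) -> list[str]:
    """List the shards that must be regenerated on other nodes."""
    failed = find_failed(nodes, now, timeout)
    return sorted({s for n in failed for s in n.shard_ids})

now = 1_700_000_000.0
nodes = [
    NodeRecord("nas", now - 60, ["s1", "s2"]),                 # proved a minute ago
    NodeRecord("old_laptop", now - 10 * 24 * 3600, ["s3"]),    # silent for 10 days
]
print(shards_to_rebuild(nodes, now))  # ['s3']
```

A timeout well above a typical night or weekend offline period keeps ordinary downtime from triggering unnecessary reconstruction.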

Harnessing unreliable nodes for a secure and reliable P2P service

By understanding and implementing redundancy, forward error correction, and intelligent node behavior modeling, it is possible to create a secure and reliable P2P service using unreliable nodes. Hivenet's P2P file system is a prime example of how these strategies can be effectively combined to ensure data persistence, accessibility, and privacy in a decentralized network.
