Ensuring data reliability in P2P networks: Strategies for success

Understanding system reliability and the role of redundancy

The reliability of a system, measured for example by its Mean Time Between Failures (MTBF), is often calculated by aggregating the reliability of its components. As a result, a service built from intermittent or unreliable components will usually be unreliable itself. Critical systems that cannot afford to fail, like airplanes or spacecraft, are therefore designed with redundancy in mind. Their critical components are duplicated so that a single failure does not bring the whole system down.
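The following minimal Python sketch shows the effect of redundancy. It expresses reliability as the probability that a component works over a given period, rather than as an MTBF. It also assumes that components fail independently, which real systems only approximate.

```python
from math import prod


def series_reliability(reliabilities: list[float]) -> float:
    """System works only if every component works: R = R1 * R2 * ... * Rn."""
    return prod(reliabilities)


def parallel_reliability(reliabilities: list[float]) -> float:
    """Redundant components: the system fails only if all of them fail."""
    return 1 - prod(1 - r for r in reliabilities)


if __name__ == "__main__":
    # 100 components that are each 99% reliable, all required at the same time.
    print(series_reliability([0.99] * 100))
    # Two redundant copies of a component that is only 90% reliable.
    print(parallel_reliability([0.9, 0.9]))
```

The first figure is roughly 0.37, so chaining many individually good components still yields a fragile system. Two redundant 90% components, by contrast, already reach 99%.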

The benefits of peer-to-peer systems

Peer-to-peer (P2P) systems consist of thousands or millions of similar peers. Each peer may be unreliable on its own, but peers are plentiful, so data can easily be spread across many of them. The simplest approach, full replication, is costly: raising a file's availability means storing complete copies of it on several peers. Fortunately, P2P networks can achieve reliability far more efficiently than that.

Utilizing forward error correction in P2P storage

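Error-correcting codes were developed from the 1950s onward to detect and repair errors in noisy communication channels. Reed–Solomon codes, introduced in 1960, are among the best known. These codes add redundant data to the transmitted information. The receiver can then recover the original even when parts of it are corrupted or lost, without requesting a retransmission, which is why the technique is called forward error correction. RAID-6 disk arrays apply the same idea: two independent parity blocks per stripe let the array survive the failure of any two drives.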
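The sketch below shows a Reed–Solomon erasure code in its simplest form. It is an illustration under our own assumptions, not Hivenet's code or any RAID implementation. It works in the prime field GF(257) instead of the GF(2^8) arithmetic that production codecs use, and it relies on slow but readable Lagrange interpolation. Each stripe of k bytes defines a polynomial of degree below k. The n shards store its values at x = 1..n, and any k of them determine the polynomial again. Because values range from 0 to 256, each shard symbol needs slightly more than one byte of storage in this toy version.

```python
P = 257  # smallest prime above 255, so every byte value is a field element


def lagrange_at(points: list[tuple[int, int]], x: int) -> int:
    """Evaluate, at x, the unique polynomial of degree < len(points) through points (mod P)."""
    total = 0
    for j, (xj, yj) in enumerate(points):
        num, den = 1, 1
        for m, (xm, _) in enumerate(points):
            if m != j:
                num = num * (x - xm) % P
                den = den * (xj - xm) % P
        total = (total + yj * num * pow(den, -1, P)) % P
    return total


def encode(data: bytes, k: int, n: int) -> dict[int, list[int]]:
    """Turn data into n shards: shards 1..k hold the data itself, k+1..n hold redundancy."""
    if not 0 < k <= n < P:
        raise ValueError("need 0 < k <= n < 257")
    padded = data + bytes(-len(data) % k)  # zero-pad to a whole number of stripes
    shards: dict[int, list[int]] = {x: [] for x in range(1, n + 1)}
    for start in range(0, len(padded), k):
        stripe = [(i + 1, padded[start + i]) for i in range(k)]
        for x in range(1, n + 1):
            shards[x].append(lagrange_at(stripe, x))
    return shards


def decode(available: dict[int, list[int]], k: int, length: int) -> bytes:
    """Rebuild the original bytes from any k surviving shards."""
    if len(available) < k:
        raise ValueError("not enough shards to reconstruct the data")
    xs = sorted(available)[:k]
    out = bytearray()
    for s in range(len(available[xs[0]])):
        points = [(x, available[x][s]) for x in xs]
        out.extend(lagrange_at(points, i) for i in range(1, k + 1))
    return bytes(out[:length])


if __name__ == "__main__":
    message = b"hello, peer-to-peer world"
    shards = encode(message, k=4, n=7)
    survivors = {x: shards[x] for x in (2, 5, 6, 7)}  # shards 1, 3 and 4 were lost
    print(decode(survivors, k=4, length=len(message)))
```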

In Hivenet's P2P file storage system, files are split into shards of data that are distributed across the P2P network. Additional shards are created to compensate for peers that disappear or for content destroyed by hardware failures. For example, your file might be turned into 100 encrypted shards sent to 100 peers, and only 70 of them are needed to rebuild the original file. When peers leave, the missing shards are regenerated. The 30 redundant shards make up 30% of the stored data, or about 43% more than the file's own size (100/70 ≈ 1.43). Even so, the probability of losing access to the content is far lower than with simple replication at a comparable storage cost.
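A back-of-the-envelope comparison illustrates this claim. The minimal Python sketch below assumes that each peer is online independently with the same probability p. That is a simplification, since real availability is correlated by time of day and region. The sketch compares full replication with a code in which any k of n shards suffice.

```python
from math import comb


def replication_availability(copies: int, p: float) -> float:
    """A replicated file is readable if at least one full copy is online."""
    return 1 - (1 - p) ** copies


def erasure_unavailability(n: int, k: int, p: float) -> float:
    """Probability that fewer than k of the n shards are online (binomial lower tail)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k))


def erasure_availability(n: int, k: int, p: float) -> float:
    """Probability that at least k of the n shards are online."""
    return 1 - erasure_unavailability(n, k, p)


if __name__ == "__main__":
    p = 0.9  # assumed per-peer availability
    print("3 full copies (3.00x storage):", replication_availability(3, p))
    print("70-of-100 shards (%.2fx storage):" % (100 / 70), erasure_availability(100, 70, p))
    print("chance the 70-of-100 file is unreadable:", erasure_unavailability(100, 70, p))
```

With p = 0.9, three full copies leave a 1-in-1,000 chance that the file is unreadable at a given moment. The 70-of-100 layout uses less than half the storage and cuts that chance by several more orders of magnitude.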

Modeling node behaviors for optimal data distribution

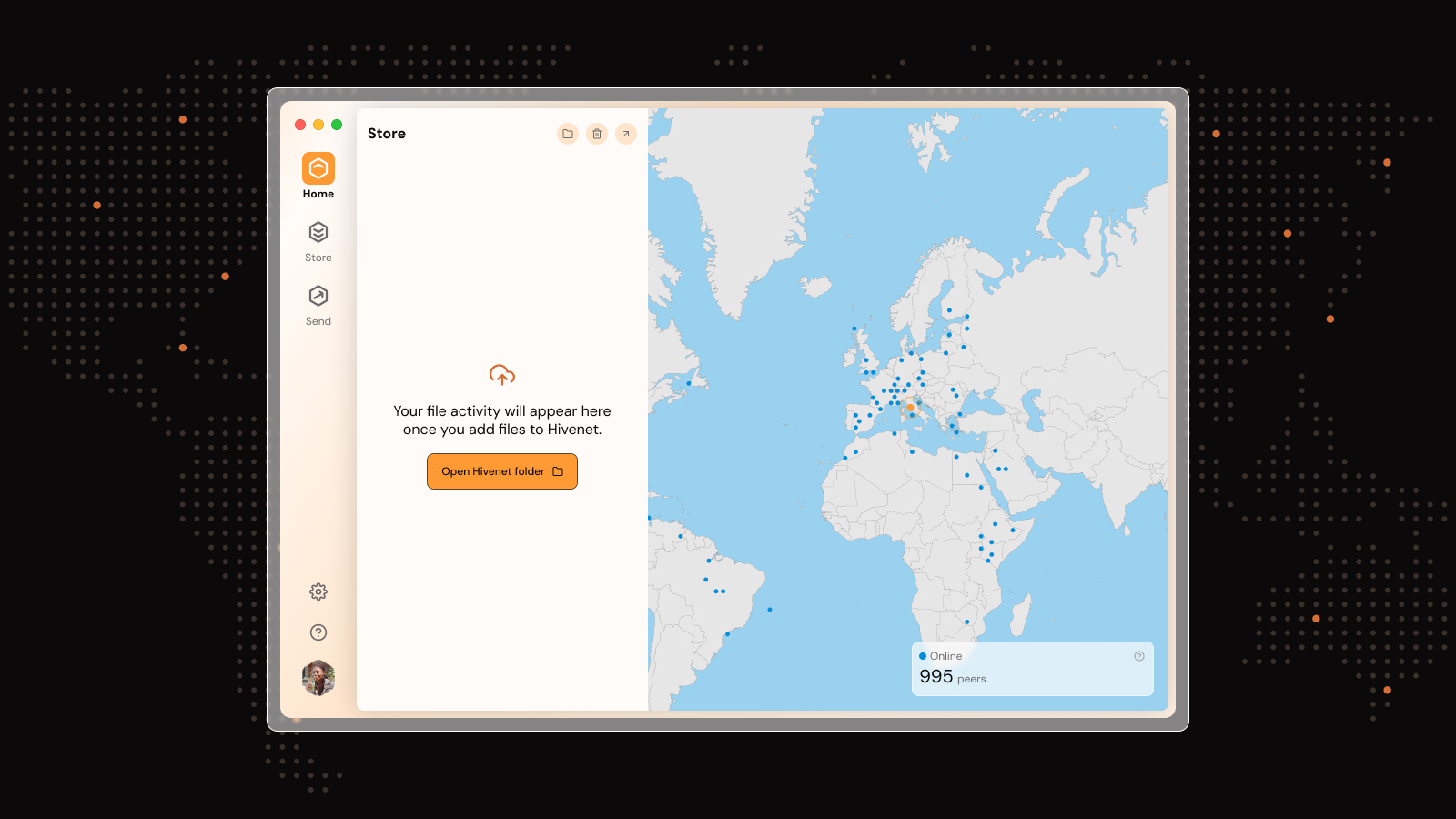

All peers in Hivenet's P2P network play the same role, but they behave differently: usage patterns and availability vary by time of day, from one peer to another, and across geographies. Hivenet learns each peer's behavior and uses it to place each shard so that enough shards are always reachable to reconstruct the data when needed.
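Hivenet's actual placement method is not described here, so the following is only a hypothetical sketch of the idea. The input is an estimated probability that each peer is online in each time slot of the day; the `profiles` are made-up data. The sketch greedily picks peers so that the expected number of reachable shards in the worst slot is as high as possible. This naturally mixes peers with complementary schedules instead of, say, choosing only peers in one time zone.

```python
def _score(expected_online: list[float], profile: list[float]) -> tuple[float, float]:
    """Worst-slot expected online shards if this peer is added, then its total availability."""
    worst = min(e + a for e, a in zip(expected_online, profile))
    return worst, sum(profile)


def place_shards(profiles: dict[str, list[float]], n: int) -> list[str]:
    """Greedily choose up to n peers maximizing the worst-slot expected number of online shards.

    profiles maps a (hypothetical) peer ID to its estimated probability of being online in each
    time slot. Ties are broken in favor of the peer with higher overall availability.
    """
    if not profiles or n <= 0:
        return []
    slots = len(next(iter(profiles.values())))
    expected_online = [0.0] * slots  # expected online shards per slot, for peers chosen so far
    remaining = dict(profiles)
    chosen: list[str] = []
    for _ in range(min(n, len(profiles))):
        best = max(remaining, key=lambda peer: _score(expected_online, remaining[peer]))
        chosen.append(best)
        expected_online = [e + a for e, a in zip(expected_online, remaining.pop(best))]
    return chosen


if __name__ == "__main__":
    # Hypothetical 4-slot day (night, morning, afternoon, evening) for five peers.
    profiles = {
        "office-a": [0.1, 0.9, 0.9, 0.2],
        "office-b": [0.1, 0.9, 0.9, 0.2],
        "home-night": [0.9, 0.2, 0.2, 0.9],
        "always-on": [0.8, 0.8, 0.8, 0.8],
        "home-evening": [0.3, 0.1, 0.4, 0.9],
    }
    print(place_shards(profiles, 3))
```

A greedy rule is easy to reason about but not guaranteed optimal. A production system would also weigh factors such as bandwidth, geography, and correlated failures.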

Ensuring data persistence in P2P networks

Forward error correction can tolerate peers that are temporarily unavailable, but some peers suffer permanent hardware failures. Peers that have not connected for a long time, or that fail to prove they still hold valid data, are marked as failed. Hivenet's P2P network then starts reconstructing the data those peers held on other peers.
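How peers prove that they still hold valid data is not specified here, so the following is a hypothetical sketch of one common pattern. Before uploading, the owner precomputes one-time challenges, each consisting of a random nonce and the expected SHA-256 hash of nonce plus shard. Later, a peer can only answer a fresh nonce by hashing it together with the full shard. The `PeerRecord` type, the offline threshold, and the function names are illustrative assumptions.

```python
import hashlib
import hmac
import os
from dataclasses import dataclass, field
from typing import Callable, Optional


def storage_proof(nonce: bytes, shard: bytes) -> bytes:
    """What an honest peer returns: a hash binding a fresh nonce to the whole shard."""
    return hashlib.sha256(nonce + shard).digest()


def make_challenges(shard: bytes, count: int) -> list[tuple[bytes, bytes]]:
    """Owner side, before upload: one-time (nonce, expected proof) pairs."""
    nonces = [os.urandom(16) for _ in range(count)]
    return [(nonce, storage_proof(nonce, shard)) for nonce in nonces]


@dataclass
class PeerRecord:
    last_seen: float  # timestamp (seconds) of the peer's last connection
    challenges: list[tuple[bytes, bytes]] = field(default_factory=list)


def is_failed(
    record: PeerRecord,
    now: float,
    max_offline: float,
    prove: Callable[[bytes], Optional[bytes]],
) -> bool:
    """Mark a peer as failed if it was offline too long or cannot prove it holds the shard."""
    if now - record.last_seen > max_offline:
        return True
    if not record.challenges:
        return False  # no unused challenge left; a real system would need a way to issue more
    nonce, expected = record.challenges.pop()
    answer = prove(nonce)  # ask the peer; None models a peer that does not answer
    return answer is None or not hmac.compare_digest(answer, expected)
```

Once a peer is flagged this way, its shards would be regenerated on other peers.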

In conclusion, by combining these strategies, P2P networks can keep data available with very high probability across a heterogeneous, rapidly evolving group of peers. By turning the sheer number of peers to their advantage, these networks provide statistical cloud storage that ensures data reliability and availability.

How does the system handle security concerns, particularly with data being distributed across numerous peers?

Hivenet protects data spread across numerous peers in several ways. Before a file is uploaded to the network, it is split into chunks, compressed, and encrypted with secure algorithms. The peers that store the chunks therefore see only encrypted data, and only the data owner can decrypt and access the original information. Retrieval follows a zero-knowledge protocol, so the data stays protected even while it is being reassembled and decrypted.

How does the process of regenerating missing shards work in detail?

Regeneration relies on the redundancy built in at upload time. Each file is split into shards, and extra shards are created to absorb node failures and departures. Because only a subset of the shards (70 out of 100 in the earlier example) is needed to reconstruct the file, the network can recompute a lost shard from the surviving ones and store it on another peer. This restores the original redundancy margin, so the file stays recoverable as peers keep coming and going.
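The article does not say which code Hivenet uses. Assuming a Reed–Solomon-style code like the one mentioned earlier, regenerating a shard does not require decoding the whole file. The shards of one stripe are values of a single polynomial of degree below k, so any k survivors are enough to evaluate that polynomial at the lost shard's position. This self-contained Python sketch works on one stripe over the prime field GF(257). The names `make_stripe_shards` and `regenerate_shard` are hypothetical.

```python
P = 257  # prime modulus; shard values are elements of GF(257)


def interpolate_at(points: list[tuple[int, int]], x: int) -> int:
    """Value at x of the polynomial of degree < len(points) passing through points (mod P)."""
    total = 0
    for j, (xj, yj) in enumerate(points):
        term = yj
        for m, (xm, _) in enumerate(points):
            if m != j:
                term = term * (x - xm) * pow((xj - xm) % P, -1, P) % P
        total = (total + term) % P
    return total


def make_stripe_shards(symbols: list[int], n: int) -> dict[int, int]:
    """Systematic encoding of one stripe: shard x holds f(x), where f(i) = symbols[i - 1]."""
    base = list(enumerate(symbols, start=1))
    return {x: interpolate_at(base, x) for x in range(1, n + 1)}


def regenerate_shard(surviving: dict[int, int], k: int, lost_x: int) -> int:
    """Recompute the shard at position lost_x from any k surviving shards of the stripe."""
    if len(surviving) < k:
        raise ValueError("too few shards survive to regenerate the missing one")
    points = sorted(surviving.items())[:k]
    return interpolate_at(points, lost_x)


if __name__ == "__main__":
    shards = make_stripe_shards([72, 105, 118, 101], n=7)  # k = 4 data symbols, 3 redundant
    lost = {2, 6}  # peers holding these shards left the network
    surviving = {x: v for x, v in shards.items() if x not in lost}
    for x in sorted(lost):
        rebuilt = regenerate_shard(surviving, k=4, lost_x=x)
        print(x, rebuilt, rebuilt == shards[x])
```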

What are the specific challenges or limitations of using forward error correction in P2P networks?

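Forward error correction in P2P networks like Hivenet comes at the price of extra storage and bandwidth for the redundant data. In the 100-shard example, only 70 shards are strictly needed, so the network stores and transfers about 1.43 times the file's size. With a classical Reed–Solomon-style code, rebuilding even a single lost shard also means downloading 70 surviving shards, which is about as much data as the whole file. Because peers join and leave constantly, these repair costs recur, and the overhead directly affects the network's efficiency and cost-effectiveness. Hivenet's implementation addresses these challenges by optimizing how data is stored and transmitted, which minimizes the extra resources needed to keep data reliable and available.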
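The overhead trade-off can be quantified with a few lines of arithmetic. This Python sketch assumes an idealized code in which any k of n equal-sized pieces rebuild the file. It also assumes naive Reed–Solomon repair, where recreating one piece means downloading k others, and it ignores metadata and retransmissions. The `Scheme` type is a hypothetical helper, and full replication is the special case k = 1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scheme:
    name: str
    n: int  # pieces stored on the network
    k: int  # pieces needed to read the file

    def storage_factor(self) -> float:
        """Bytes stored (and uploaded) per byte of file."""
        return self.n / self.k

    def piece_size(self, file_size: float) -> float:
        """Size of one stored piece."""
        return file_size / self.k

    def repair_download(self, file_size: float) -> float:
        """Bytes fetched to rebuild one lost piece: k surviving pieces."""
        return self.k * self.piece_size(file_size)


if __name__ == "__main__":
    file_size = 700.0  # MB, arbitrary example
    for scheme in (Scheme("3 full copies", 3, 1), Scheme("70-of-100 shards", 100, 70)):
        print(
            f"{scheme.name}: stores {scheme.storage_factor():.2f}x, "
            f"piece {scheme.piece_size(file_size):.0f} MB, "
            f"repairing one piece downloads {scheme.repair_download(file_size):.0f} MB"
        )
```

Both schemes download about the file's size to repair one piece. The coded scheme, however, does so to recreate a piece that is only 1/70 of the file. That is why repair bandwidth, not just storage, is the cost to watch when peers churn.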
