Accelerated File Transfer: Complete Guide to Fast, Secure Data Movement

Moving a 50GB video file from New York to Tokyo used to take hours. Now it takes minutes. The difference lies in accelerated file transfer technology—a specialized approach that bypasses traditional protocol limitations to maximize your network’s potential.

Standard FTP and HTTP transfers often use less than 20% of available bandwidth on long-distance, high-latency links. Accelerated file transfer solutions can push utilization toward 95%, and vendors advertise speedups of up to 100 times over conventional methods. The payoff is not only speed: it changes how organizations move sensitive data across global networks. Raysync, for instance, is an enterprise file transfer solution that offers scalable, secure, high-speed transfers without extra charges for transfer volume. It can also move thousands of small files per second, which helps when a workload mixes very large and very small files.

What is Accelerated File Transfer

Accelerated file transfer technology speeds up data movement by replacing TCP as the data transport with custom protocols built on UDP. Traditional methods such as FTP and SFTP inherit TCP's preference for reliability over throughput. Accelerated solutions aim for both by adding their own error correction and congestion control. Many of these products also handle synchronization. Raysync supports one-to-one and one-to-many synchronization, and some solutions add many-to-many synchronization for more complex, multi-directional data relationships.

The core benefits are large speed increases, better resilience to network disruptions, and integrated security that supports compliance requirements. Transfers of large files that once took hours can finish in minutes over the same physical network. FileCatalyst, for example, pairs accelerated transfer with 256-bit AES encryption. For files shared by link, password protection ensures that only intended recipients can open them. A per-link expiry date encourages timely downloads and limits the window for unauthorized access.

Key Performance Metrics

Transfer speed is the most visible improvement. A 1GB file that takes 30 minutes via standard FTP can complete in under 3 minutes with acceleration technology. For large datasets the savings compound: multi-terabyte transfers that once required overnight windows can finish during normal business hours. Some services also remove size and volume caps. Smash lets users send files of any size with unlimited uploads, and it sends email notifications when files are sent and downloaded. MASV likewise offers unlimited transfers with no file size limits, which suits businesses that move large volumes of data.

Bandwidth utilization can reach about 95% of available network capacity, far above what standard TCP-based protocols achieve under high latency or packet loss. Higher utilization means shorter transfers on the same link. That translates into cost savings and productivity gains for teams that regularly move large files.

Industries That Rely on Accelerated Transfers

Media and entertainment companies use accelerated file transfer to distribute 4K and 8K video content globally. Post-production workflows depend on moving raw footage, rendered sequences, and finished programs between studios, editors, and distributors without delay. Smash, a popular option in this space, encrypts transfers to protect valuable media assets and uploads files to the server closest to the sender to improve speed. Its lack of size limits, password protection, and email notifications also fit media-heavy workflows.

Healthcare organizations transfer medical imaging data, genomic datasets, and research files between facilities. These transfers often involve sensitive data that demands both speed and strict security compliance. Send with Hivenet, for instance, delivers files through download links, so recipients do not need a Hivenet account. This simplifies sharing with outside clinicians and collaborators. Files are stored securely for a specified period of up to 7 days. That gives teams time to retrieve time-sensitive data while limiting how long it stays accessible, which supports healthcare data policies.

Manufacturing companies distribute CAD files, design specifications, and supply chain data across global operations. Engineering teams collaborate on product development by sharing large design files that would otherwise create workflow bottlenecks.

Financial services firms use accelerated transfers for market data distribution, regulatory reporting, and secure backup operations where timing affects business outcomes.

How Accelerated File Transfer Works

Accelerated file transfer protocols move beyond TCP's limitations by adopting UDP as their transport foundation. This shift enables custom flow control, error recovery, and bandwidth optimization designed specifically for high-speed data movement. Some products change the topology as well. Resilio uses a peer-to-peer architecture in which many endpoints exchange data directly, improving speed and reliability. The Resilio Platform moves large datasets across multiple locations and can sync files across environments in real time. This supports remote and distributed teams that need secure, efficient movement of enterprise data.

UDP-Based Protocol Advantages

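TCP was designed for reliability and congestion avoidance, but these features reduce throughput under the high latency and packet loss common in WAN environments. A TCP sender can keep at most one window of unacknowledged data in flight per round trip, and it cuts that window sharply when it detects loss. As a result, throughput falls as round-trip time and loss rates rise. UDP provides a connectionless service that lets developers build their own mechanisms for handling these network challenges.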
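Two standard back-of-the-envelope bounds show why latency matters so much. The first is that a window-limited TCP flow cannot exceed window/RTT. The second is the Mathis et al. model, which approximates steady-state throughput of Reno-style TCP under random loss as (MSS/RTT)·C/√p with C ≈ 1.22. The sketch below plugs in hypothetical numbers for a New York to Tokyo path: 180 ms RTT, 0.1% loss, and a 64 KiB window (roughly the maximum without TCP window scaling). Both bounds come out to only a few Mbit/s, however fast the underlying link is. Modern congestion control such as CUBIC or BBR behaves differently, so treat these figures as rough illustrations.

```python
import math

def window_limited_bps(window_bytes: int, rtt_s: float) -> float:
    """Upper bound when a flow can send at most one window per round trip."""
    return window_bytes * 8 / rtt_s

def mathis_limit_bps(mss_bytes: int, rtt_s: float, loss_rate: float) -> float:
    """Mathis et al. approximation for Reno-style TCP: (MSS / RTT) * C / sqrt(p)."""
    c = math.sqrt(3 / 2)  # about 1.22
    return (mss_bytes * 8 / rtt_s) * c / math.sqrt(loss_rate)

# Hypothetical long-haul path: 180 ms round trip, 0.1% random loss, 1460-byte MSS.
rtt = 0.180
print(f"64 KiB window bound: {window_limited_bps(64 * 1024, rtt) / 1e6:.1f} Mbit/s")
print(f"Mathis loss bound:   {mathis_limit_bps(1460, rtt, 0.001) / 1e6:.1f} Mbit/s")
```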

Most solutions employ a dual-channel architecture where the control channel uses TCP for session management and authentication, while the data channel uses UDP for high-speed file transfers. This approach combines reliability with performance optimization.

Parallel Data Streams and Packet Optimization

Large files are broken into chunks that are transmitted simultaneously over separate streams. Each stream carries its own window of in-flight data, so parallelization raises aggregate throughput on links where a single stream is limited by latency. Resilio, for example, syncs large files faster by splitting them into chunks.
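The chunking step can be sketched locally. In this minimal example, threads stand in for network streams. The file is split into (offset, length) ranges, each worker copies one range, and the destination is pre-sized so chunks can land in any order. CHUNK_SIZE and the function names are hypothetical. A real tool would send each range over its own connection rather than copy it on disk.

```python
import os
from concurrent.futures import ThreadPoolExecutor

CHUNK_SIZE = 4 * 1024 * 1024  # hypothetical 4 MiB chunks; real tools tune this value

def plan_chunks(file_size: int, chunk_size: int = CHUNK_SIZE) -> list:
    """Split a file into (offset, length) ranges that can be sent independently."""
    return [(off, min(chunk_size, file_size - off)) for off in range(0, file_size, chunk_size)]

def copy_chunk(src: str, dst: str, offset: int, length: int) -> int:
    """Stand-in for one network stream: read one range and write it at the same offset."""
    with open(src, "rb") as fin, open(dst, "r+b") as fout:
        fin.seek(offset)
        data = fin.read(length)
        fout.seek(offset)
        fout.write(data)
    return len(data)

def parallel_copy(src: str, dst: str, streams: int = 8, chunk_size: int = CHUNK_SIZE) -> int:
    """Copy src to dst using `streams` workers; returns the number of bytes moved."""
    size = os.path.getsize(src)
    with open(dst, "wb") as f:
        f.truncate(size)  # pre-size the destination so chunks can be written in any order
    chunks = plan_chunks(size, chunk_size)
    with ThreadPoolExecutor(max_workers=streams) as pool:
        futures = [pool.submit(copy_chunk, src, dst, off, n) for off, n in chunks]
        return sum(f.result() for f in futures)
```

Pre-sizing the destination is the key design choice. Because each worker writes only its own byte range, no coordination is needed beyond waiting for all workers to finish.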

Custom algorithms detect and compensate for packet loss and out-of-order delivery. When packets arrive damaged or missing, the system quickly identifies gaps and retransmits only the affected data, maintaining transfer momentum.
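On the receiving side, gap detection can be as simple as comparing the set of sequence numbers received so far with the expected range. This hypothetical helper returns the missing runs. A receiver could report them back as a negative acknowledgement (NACK) so the sender resends only those packets. Out-of-order arrivals need no special handling, because packets are tracked by sequence number rather than arrival order.

```python
def missing_ranges(received: set, total_packets: int) -> list:
    """Return inclusive (first, last) runs of sequence numbers not yet received."""
    gaps = []
    start = None
    for seq in range(total_packets):
        if seq not in received:
            if start is None:
                start = seq  # a new gap begins here
        elif start is not None:
            gaps.append((start, seq - 1))  # the gap ended just before this packet
            start = None
    if start is not None:
        gaps.append((start, total_packets - 1))  # gap runs to the end of the transfer
    return gaps

# Packets 3-4 and 7-8 were lost; the rest arrived out of order.
arrivals = [9, 0, 2, 1, 6, 5]
print(missing_ranges(set(arrivals), 10))  # [(3, 4), (7, 8)]
```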

Network congestion detection adapts transfer rates in real-time, maximizing bandwidth without overwhelming network equipment. These systems monitor round-trip times, packet loss rates, and throughput metrics to optimize performance continuously.
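A common way to track round-trip time is the smoothed estimator from TCP's retransmission timer (RFC 6298). It is an exponentially weighted moving average of RTT samples plus a running measure of their variation. The sketch below implements those update rules. It also adds a simple heuristic that is an assumption here, not part of RFC 6298: smoothed RTT rising above the lowest RTT seen suggests that router queues are filling, a signal delay-based congestion controllers use.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class RttEstimator:
    """Tracks smoothed RTT (SRTT) and RTT variation (RTTVAR) with the RFC 6298 rules."""
    alpha: float = 1 / 8  # gain for SRTT (RFC 6298 value)
    beta: float = 1 / 4   # gain for RTTVAR (RFC 6298 value)
    srtt: Optional[float] = None
    rttvar: float = 0.0
    min_rtt: float = float("inf")

    def update(self, sample_s: float) -> None:
        self.min_rtt = min(self.min_rtt, sample_s)
        if self.srtt is None:
            # The first measurement initialises both estimates.
            self.srtt = sample_s
            self.rttvar = sample_s / 2
        else:
            # RTTVAR is updated first, using the previous SRTT.
            self.rttvar = (1 - self.beta) * self.rttvar + self.beta * abs(self.srtt - sample_s)
            self.srtt = (1 - self.alpha) * self.srtt + self.alpha * sample_s

    def rto(self, clock_granularity_s: float = 0.001) -> float:
        """Retransmission timeout, with RFC 6298's recommended 1-second floor."""
        if self.srtt is None:
            return 1.0  # RFC 6298 initial RTO before any sample
        return max(1.0, self.srtt + max(clock_granularity_s, 4 * self.rttvar))

    def queuing_delay(self) -> float:
        """SRTT above the lowest RTT seen: a rough sign that queues are building."""
        return 0.0 if self.srtt is None else self.srtt - self.min_rtt
```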

Checkpoint Restart Functionality

Transfers interrupted by network failures can resume from the last successful checkpoint rather than starting over. The system tracks transfer progress in small increments, storing metadata about completed segments.

When connectivity resumes, only the remaining segments transfer, saving time and reducing network load. This feature proves essential for transferring large files over unreliable connections or during network maintenance windows.
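A checkpoint can be as small as a persisted list of finished chunk indices. In this minimal sketch, the class name and JSON layout are hypothetical. A chunk is marked done only after its bytes are written, and the state file is replaced in one step. On restart, only the remaining indices are requested. Production tools typically also verify chunk checksums and flush data to disk before recording progress.

```python
import json
import os

class Checkpoint:
    """Persists which chunk indices are complete so a restarted transfer can skip them."""

    def __init__(self, path: str, total_chunks: int) -> None:
        self.path = path
        self.total_chunks = total_chunks
        self.done = set()
        if os.path.exists(path):
            with open(path) as f:
                state = json.load(f)
            # Only trust saved state that describes the same chunk layout.
            if state.get("total_chunks") == total_chunks:
                self.done = set(state["done"])

    def mark_done(self, index: int) -> None:
        """Call only after the chunk's bytes are written at the destination."""
        self.done.add(index)
        tmp = self.path + ".tmp"
        with open(tmp, "w") as f:
            json.dump({"total_chunks": self.total_chunks, "done": sorted(self.done)}, f)
        # os.replace swaps the file in one step, so readers never see a half-written checkpoint.
        os.replace(tmp, self.path)

    def remaining(self) -> list:
        """Chunk indices that still need to be transferred."""
        return [i for i in range(self.total_chunks) if i not in self.done]
```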

Advanced Transfer Protocols

Several proprietary protocols demonstrate accelerated file transfer capabilities, each optimized for specific use cases and network conditions. These protocols are also designed to ensure secure file transfer for sensitive and high-value data.

Aspera FASP Technology

IBM's Aspera uses its Fast Adaptive Secure Protocol (FASP), which IBM says delivers transfers up to 100 times faster than TCP. The protocol is designed to use available bandwidth regardless of latency or network quality, making it effective for transcontinental transfers.

FASP adapts to network conditions automatically, scaling transfer rates up or down based on available bandwidth and congestion levels. This adaptive behavior ensures optimal performance without manual tuning.

FileCatalyst’s Lightweight UDP Channels

FileCatalyst relies on proprietary UDP-based protocols to provide high-speed transfers with minimal overhead. The system supports direct browser and mobile access alongside enterprise features, making it accessible for diverse user scenarios.

The platform’s acceleration engine focuses on simplicity and effectiveness, reducing the complexity often associated with high-performance transfer solutions while maintaining enterprise-grade security and reliability.

IBM’s High-Speed Transfer Solutions

IBM offers multiple acceleration technologies beyond Aspera, including Sterling solutions designed for enterprise data exchange. These platforms integrate automatic bandwidth optimization with workflow management capabilities.

The solutions support petabyte-scale transfers while maintaining detailed audit trails and compliance reporting required in regulated industries.

Signiant’s Media-Focused Acceleration

Signiant’s acceleration engine targets media and entertainment workflows specifically. The platform integrates with existing production tools and supports hybrid deployment models that span on-premises and cloud environments.

The solution optimizes for video files, raw images, and other media formats common in broadcast and post-production workflows, with features like automatic format detection and workflow integration.

Network Optimization Features

Advanced accelerated file transfer solutions combine multiple optimization techniques to maximize performance and reliability. GoFast, for instance, sends data packets over lightweight UDP channels, keeping transfers fast even under challenging network conditions. These solutions also build in security measures so that sensitive data stays protected against cyber threats during transfer.

Adaptive Rate Control

These systems dynamically adjust transfer speeds to maximize available bandwidth without causing network congestion. Rate control algorithms monitor network performance indicators and adjust transmission rates in real-time.

The optimization process considers factors like round-trip time, packet loss rates, and available bandwidth to determine optimal transfer speeds. This adaptive approach ensures consistent performance across varying network conditions.
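A generic way to turn those signals into a sending rate is additive-increase, multiplicative-decrease (AIMD): raise the rate steadily while the path looks healthy and cut it by a fixed factor when congestion appears. The sketch below is an illustration only, not the algorithm of FASP or any other product, and every threshold in it is hypothetical. Unlike classic TCP, it ignores loss below a threshold, so random packet loss on a long link does not by itself throttle the transfer.

```python
from dataclasses import dataclass

@dataclass
class RateController:
    """AIMD-style sending-rate controller; all default values are hypothetical."""
    rate_bps: float
    max_rate_bps: float
    min_rate_bps: float = 1e6
    step_bps: float = 5e6             # additive increase per feedback interval
    backoff: float = 0.7              # multiplicative decrease factor
    loss_threshold: float = 0.02      # loss fraction treated as congestion
    delay_threshold_s: float = 0.020  # queueing delay treated as congestion

    def on_feedback(self, loss_rate: float, queuing_delay_s: float) -> float:
        """Adjust the rate from one interval's loss and delay measurements."""
        congested = loss_rate > self.loss_threshold or queuing_delay_s > self.delay_threshold_s
        if congested:
            self.rate_bps = max(self.min_rate_bps, self.rate_bps * self.backoff)
        else:
            self.rate_bps = min(self.max_rate_bps, self.rate_bps + self.step_bps)
        return self.rate_bps

rc = RateController(rate_bps=100e6, max_rate_bps=1e9)
for loss, qdelay in [(0.001, 0.002), (0.001, 0.002), (0.05, 0.030)]:
    print(f"{rc.on_feedback(loss, qdelay) / 1e6:.1f} Mbit/s")
```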

Automatic Retry and Error Correction

Reliable delivery over unreliable networks requires sophisticated error handling mechanisms. Accelerated transfer systems quickly identify lost or corrupted data packets and retransmit only the affected segments.

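Forward error correction (FEC) adds redundant data to the stream so the receiver can reconstruct a limited number of lost or corrupted packets without waiting for a retransmission. This reduces the impact of network errors on overall transfer performance. The benefit is greatest on high-latency links, where each retransmission costs a full round trip.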
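The simplest FEC scheme sends one XOR parity packet per group of data packets. If any single packet in the group is lost, XOR-ing the parity with the survivors reproduces it. This sketch illustrates only that principle; it is not any specific product's scheme. Production systems typically use stronger codes, such as Reed-Solomon, that survive several losses per group.

```python
from functools import reduce
from typing import List, Optional

def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length packets."""
    return bytes(x ^ y for x, y in zip(a, b))

def make_parity(packets: List[bytes]) -> bytes:
    """One parity packet for a group; packets are assumed padded to equal length."""
    return reduce(xor_bytes, packets)

def recover(group: List[Optional[bytes]], parity: bytes) -> List[bytes]:
    """Rebuild at most one missing packet (None) from the parity and the survivors."""
    missing = [i for i, p in enumerate(group) if p is None]
    if len(missing) > 1:
        raise ValueError("single XOR parity can repair only one loss per group")
    repaired = list(group)
    if missing:
        survivors = [p for p in group if p is not None]
        # parity = p0 ^ p1 ^ ... ^ pn, so XOR-ing out the survivors leaves the lost packet.
        repaired[missing[0]] = reduce(xor_bytes, survivors, parity)
    return repaired

group = [b"abcd", b"efgh", b"ijkl"]
parity = make_parity(group)
print(recover([b"abcd", None, b"ijkl"], parity))  # [b'abcd', b'efgh', b'ijkl']
```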

WAN Optimization Integration

Many solutions integrate compression, deduplication, and caching to minimize data transmission requirements. These techniques reduce the actual amount of data crossing the network while maintaining file integrity.

Compression can be tuned to file type. Documents and logs often compress well, while already-compressed formats such as most video and images gain little. Deduplication identifies redundant data segments, within or across files, and sends each one only once.
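Deduplication and compression can be sketched together with standard-library tools. The file is cut into fixed-size chunks, each chunk is identified by its SHA-256 digest, and only unique chunks are compressed with zlib and sent. A "recipe" of digests lets the receiver reassemble the original. The function names and the 64 KiB chunk size are hypothetical. Fixed-size chunking misses duplicates that are shifted by an insertion, which is why some systems use content-defined chunking instead.

```python
import hashlib
import zlib

def dedupe_and_compress(data: bytes, chunk_size: int = 64 * 1024):
    """Return (store, recipe): unique compressed chunks keyed by digest, plus chunk order."""
    store = {}
    recipe = []
    for off in range(0, len(data), chunk_size):
        chunk = data[off:off + chunk_size]
        digest = hashlib.sha256(chunk).hexdigest()
        if digest not in store:  # only the first copy of each chunk is sent
            store[digest] = zlib.compress(chunk, 6)
        recipe.append(digest)
    return store, recipe

def rebuild(store: dict, recipe: list) -> bytes:
    """Receiver side: decompress chunks in recipe order."""
    return b"".join(zlib.decompress(store[d]) for d in recipe)

sample = b"A" * (3 * 64 * 1024) + b"B" * 1000
store, recipe = dedupe_and_compress(sample)
sent = sum(len(c) for c in store.values())
print(f"{len(recipe)} chunks, {len(store)} unique, {sent} bytes sent for {len(sample)} bytes")
```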

Multi-Path Transfer Capabilities

Some platforms aggregate bandwidth from multiple network connections simultaneously. This capability proves valuable in environments with multiple internet connections or MPLS circuits.

Load balancing across multiple paths increases total available bandwidth while providing redundancy. If one path fails, transfers continue using remaining connections without interruption.
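One way to split work across paths is to assign chunks in proportion to each path's measured bandwidth. The sketch below uses smooth weighted round-robin, a scheme some load balancers use, and treats a path with zero measured bandwidth as down. Rerunning the plan for the remaining chunks after a failure moves them onto the surviving paths. Path names and bandwidth figures are hypothetical.

```python
def assign_chunks(num_chunks: int, paths: dict) -> dict:
    """Map each live path to the chunk indices it should carry, weighted by bandwidth."""
    live = {name: bw for name, bw in paths.items() if bw > 0}
    if not live:
        raise RuntimeError("no usable paths")
    total = sum(live.values())
    credit = {name: 0.0 for name in live}
    plan = {name: [] for name in live}
    for chunk in range(num_chunks):
        # Every path earns credit equal to its weight; the richest path takes the chunk.
        for name, bw in live.items():
            credit[name] += bw
        best = max(credit, key=credit.get)
        credit[best] -= total
        plan[best].append(chunk)
    return plan

print(assign_chunks(10, {"fiber": 300.0, "mpls": 100.0, "lte": 100.0}))
print(assign_chunks(4, {"fiber": 0.0, "mpls": 100.0}))  # fiber down: everything on mpls
```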

Benefits of Accelerated File Transfer

The primary advantage centers on dramatically reduced transfer times for large files. Organizations report completing transfers that previously took hours in just minutes using the same network infrastructure. Accelerated file transfer solutions also help prevent data loss by enabling faster and more reliable backup operations.

Significant Transfer Time Reductions

A 10GB video file that requires 2 hours via standard FTP can complete in 10-15 minutes with acceleration technology. For organizations regularly moving large datasets, these time savings translate to improved productivity and reduced operational costs.
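The arithmetic behind these figures is easy to check. Assuming decimal units (1 GB = 10^9 bytes), the hypothetical helper below converts size and duration into average throughput. Two hours works out to roughly 11 Mbit/s. Ten to fifteen minutes implies roughly 89 to 133 Mbit/s, an 8x to 12x speedup.

```python
def throughput_mbps(size_bytes: float, seconds: float) -> float:
    """Average throughput in Mbit/s for a transfer of size_bytes taking `seconds`."""
    return size_bytes * 8 / seconds / 1e6

size = 10e9  # 10 GB in decimal units
for label, secs in [("FTP, 2 h", 2 * 3600), ("accelerated, 15 min", 15 * 60), ("accelerated, 10 min", 10 * 60)]:
    print(f"{label:>20}: {throughput_mbps(size, secs):6.1f} Mbit/s")
```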

Peak performance scenarios demonstrate even greater improvements. Under optimal conditions, transfers complete 10 to 100 times faster than standard methods, with actual results depending on file size, network conditions, and distance.

Reliable Delivery Over Long Distances

High-latency networks traditionally challenge TCP-based transfers, but accelerated solutions maintain performance across transcontinental and satellite connections. Custom protocols adapt to network characteristics rather than being limited by them.

Error recovery mechanisms ensure delivery even when packet loss rates reach levels that would cripple standard transfers. This reliability enables organizations to depend on wide-area transfers for business-critical workflows.

Cost Savings Through Efficiency

Shorter transfer windows reduce operational costs by improving staff productivity and freeing network capacity sooner. Teams can complete file transfers during normal business hours rather than scheduling overnight operations.

Organizations report significant cost savings when migrating from traditional transfer methods to accelerated solutions, particularly for high-volume, time-sensitive workflows.

Enhanced Security with Performance

Modern accelerated transfer solutions implement AES-256 encryption and integrity verification with little performance overhead, since modern CPUs accelerate AES in hardware. End-to-end encryption protects sensitive data throughout the transfer process.
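Python's standard library has no AES, so this sketch shows only the integrity half, using a per-chunk HMAC-SHA256 tag. The tag covers the chunk's sequence number as well as its payload, so a chunk cannot be accepted at a different position without detection. It is assumed that the session key was agreed over an encrypted control channel. In practice, an authenticated cipher such as AES-256-GCM from a cryptography library provides encryption and integrity in one step.

```python
import hashlib
import hmac
import secrets

def tag_chunk(key: bytes, seq: int, chunk: bytes) -> bytes:
    """HMAC-SHA256 over the 8-byte sequence number followed by the chunk payload."""
    return hmac.new(key, seq.to_bytes(8, "big") + chunk, hashlib.sha256).digest()

def verify_chunk(key: bytes, seq: int, chunk: bytes, tag: bytes) -> bool:
    """Constant-time comparison avoids leaking how many tag bytes matched."""
    return hmac.compare_digest(tag_chunk(key, seq, chunk), tag)

session_key = secrets.token_bytes(32)  # assumed to be agreed over the encrypted control channel
tag = tag_chunk(session_key, 7, b"chunk payload")
print(verify_chunk(session_key, 7, b"chunk payload", tag))  # True
print(verify_chunk(session_key, 8, b"chunk payload", tag))  # False: wrong position
```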

Authentication mechanisms, access controls, and audit logging meet regulatory requirements for industries handling protected data. These security features integrate seamlessly with acceleration technology.

Performance Improvements

Real-world performance metrics demonstrate the practical benefits of accelerated file transfer technology across different scenarios and network conditions.

Bandwidth Utilization Efficiency

Accelerated solutions typically achieve 95% bandwidth utilization compared to 10-20% common with standard TCP transfers over high-latency connections. This efficiency improvement directly translates to faster completion times.
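A quick sketch shows how utilization alone changes completion time. It uses a hypothetical 100 GB dataset on a hypothetical 1 Gbit/s link. The transfer takes about 89 minutes at 15% utilization and about 14 minutes at 95%.

```python
def transfer_time_s(size_bytes: float, link_bps: float, utilization: float) -> float:
    """Seconds to move size_bytes over a link running at the given fraction of capacity."""
    return size_bytes * 8 / (link_bps * utilization)

size = 100e9  # hypothetical 100 GB dataset
link = 1e9    # hypothetical 1 Gbit/s link
for u in (0.15, 0.95):
    print(f"{u:.0%} utilization: {transfer_time_s(size, link, u) / 60:.0f} min")
```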

The utilization advantage becomes more pronounced as network latency increases. Transcontinental transfers show the greatest performance improvements due to TCP’s sensitivity to round-trip time delays.

Multi-File Transfer Capabilities

Many files can be transferred concurrently, so entire project folders or dataset collections move in parallel rather than one file at a time. This matters most for collections of small files, where per-file overhead would otherwise leave the link idle between transfers.

System memory management and multiple threads let concurrent transfers proceed while maintaining data integrity and avoiding resource conflicts. As a result, large batches of files can move nearly as efficiently as a single large file.

Reduced Latency Impact

High latency networks severely impact traditional transfer protocols, but accelerated solutions minimize these effects through custom congestion control and packet management techniques.

Satellite connections, transcontinental links, and other high-latency scenarios benefit most from acceleration technology. Performance varies far less with geographic distance than it does with TCP-based transfers.

Business Impact

Accelerated file transfer technology creates measurable business value across multiple dimensions beyond simple time savings.

Faster Time-to-Market

Media companies can distribute content globally in minutes rather than hours, enabling same-day release schedules across multiple time zones. This capability directly impacts revenue potential for time-sensitive content.

Product development teams share design files and collaborate in real-time rather than waiting for overnight transfers. Engineering iterations accelerate when large datasets move quickly between team members.

Improved Remote Collaboration

Remote teams can share large files and datasets as if working in the same location. Real-time collaboration becomes possible for workflows that previously required physical media shipping or overnight transfer windows.

Cloud computing integration enables hybrid workflows where on-premises systems and cloud platforms exchange data seamlessly. Teams can leverage cloud processing power without transfer bottlenecks.

Enhanced Disaster Recovery

Reduced backup windows improve business continuity by enabling more frequent data protection operations. Organizations can replicate larger datasets within acceptable recovery time objectives.

Multiple sites can maintain synchronized data through efficient replication, improving disaster recovery capabilities while reducing the time required for regular backup operations.

Top Accelerated File Transfer Solutions

The market includes enterprise platforms, cloud-native solutions, and hybrid deployment options, each optimized for different use cases and organizational requirements.

Enterprise-Grade Platforms

Aspera (IBM) provides petabyte-scale transfer capabilities with comprehensive automation, command-line tools, and desktop clients. The platform supports both on-premises and cloud deployments with extensive API integration.

Signiant offers workflow integration specifically designed for broadcast and media organizations. The platform supports hybrid deployments and includes features like automated transcoding and content distribution.

FileCatalyst provides direct browser and mobile application support alongside enterprise features. The solution focuses on ease of use while maintaining high-performance transfer capabilities.

IBM Sterling delivers enterprise data exchange with strong compliance focus and integration with existing business processes and workflow management systems.

Cloud-Native Solutions

AWS DataSync provides managed transfer services optimized for moving data between on-premises storage and AWS cloud services. The service includes bandwidth throttling and transfer scheduling capabilities.

Azure File Sync enables hybrid cloud scenarios in which on-premises Windows file servers synchronize with Azure Files shares. The service supports multiple server endpoints and handles file conflicts automatically.

Google Cloud's Storage Transfer Service moves data between cloud platforms and on-premises environments, supporting large-scale migration projects as well as ongoing synchronization. Google Drive, which integrates with other Google services, can also be a convenient option for organizations already using the Google ecosystem.

Pricing Models and Considerations

Enterprise solutions typically offer subscription licensing based on concurrent users, transfer volumes, or site licenses. Pricing varies significantly based on features, support levels, and deployment models.

Cloud transfer services often use consumption-based pricing, with per-GB rates that vary widely by provider and service tier. Many organizations find predictable monthly costs easier to budget than per-transfer fees.

Under metered pricing, cost scales with transfer volume, and bulk transfers often receive volume discounts. Organizations should evaluate total cost of ownership, including setup, training, and ongoing operational expenses.
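Comparing a flat subscription with tiered per-GB billing comes down to a small calculation. The sketch below uses entirely hypothetical prices and tier boundaries, not any vendor's rates. It computes the metered cost of a monthly volume and reports which option is cheaper at that volume.

```python
import math

def tiered_cost(gb: float, tiers: list) -> float:
    """Cost of `gb` under volume tiers given as (upper_bound_gb, price_per_gb), ascending."""
    cost, prev_bound = 0.0, 0.0
    for bound, price in tiers:
        if gb <= prev_bound:
            break
        # Bill only the portion of the volume that falls inside this tier.
        cost += (min(gb, bound) - prev_bound) * price
        prev_bound = bound
    return cost

def cheaper_option(gb: float, tiers: list, flat_monthly: float) -> str:
    """Pick the cheaper of metered billing and a flat monthly subscription."""
    return "metered" if tiered_cost(gb, tiers) < flat_monthly else "subscription"

# Hypothetical prices for illustration only.
TIERS = [(1_000, 0.10), (10_000, 0.08), (math.inf, 0.05)]
for volume in (500, 5_000, 50_000):
    print(volume, round(tiered_cost(volume, TIERS), 2), cheaper_option(volume, TIERS, 1_500))
```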

Implementation Best Practices

Successful deployment requires careful planning across network infrastructure, security configuration, and user adoption strategies.

Network Assessment and Planning

Comprehensive bandwidth planning ensures adequate capacity for peak transfer periods without impacting other network traffic. Organizations should assess current utilization patterns and plan for growth.

Quality of Service (QoS) policies can prioritize transfer traffic during business hours while allowing background transfers during off-peak periods. Network monitoring helps identify bottlenecks and optimization opportunities.

Firewall and security configurations must accommodate UDP-based protocols while maintaining security policies. Many organizations require specific port configurations and traffic inspection policies.

Security Configuration Requirements

End-to-end encryption, using AES-256 for file data and TLS 1.3 for connections, protects data in transit with little impact on transfer performance. Security policies should address both data protection and access control requirements.

Multi-factor authentication and role-based access controls prevent unauthorized access while enabling legitimate users to transfer files efficiently. Integration with existing identity management systems simplifies user administration.

Audit logging and compliance reporting meet regulatory requirements while providing visibility into transfer activity. Organizations can track who transferred which files, when, and to where.

Integration with Existing Workflows

API integration enables automated transfers triggered by business processes, content management systems, or workflow engines. This automation reduces manual effort and improves consistency.

Seamless integration with existing tools and processes encourages user adoption while reducing training requirements. Organizations should plan integration strategies before deployment.

User training and adoption strategies maximize return on investment by ensuring teams understand new capabilities and best practices for different transfer scenarios.

Technical Configuration

Proper technical setup ensures optimal performance while maintaining security and reliability requirements.

Firewall and Network Security

UDP-based acceleration protocols require specific firewall configurations to allow traffic on designated ports. Security teams should understand protocol requirements while maintaining protection policies.

Network address translation (NAT) and firewall traversal capabilities enable transfers across complex network topologies without compromising security or requiring extensive infrastructure changes.

Intrusion detection and prevention systems should be configured to recognize legitimate transfer traffic while continuing to protect against threats and unauthorized access attempts.

Monitoring and Performance Management

Comprehensive monitoring systems track transfer performance, completion rates, and error conditions. Real-time alerts notify administrators of performance degradation or failed transfers.

Performance metrics help identify optimization opportunities and capacity planning requirements. Organizations can track trends and plan infrastructure improvements based on actual usage patterns.

Backup and redundancy planning ensures transfer infrastructure remains available for critical workloads. High-availability configurations prevent single points of failure.

System Requirements and Optimization

System memory requirements vary based on concurrent transfer volumes and file sizes. Adequate RAM ensures optimal performance for multiple threads and parallel processing operations.

Storage subsystem performance affects overall transfer speeds, particularly for reading source files and writing destination data. SSD storage provides better performance than traditional disk systems.

Operating system tuning optimizes network buffer sizes, TCP/UDP parameters, and file system performance for large file operations. These optimizations complement acceleration protocol capabilities.
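A common starting point for buffer sizing is the bandwidth-delay product (BDP): the number of bytes that must be in flight to keep a path full. The sketch computes the BDP for a hypothetical 1 Gbit/s path with a 180 ms RTT, which comes to 22.5 MB. It then requests a UDP receive buffer of that size and reports what the kernel granted. On Linux, the request is silently capped at net.core.rmem_max and the reported value is doubled for kernel bookkeeping. Some other systems may reject oversized requests instead, which the code tolerates.

```python
import socket

def bdp_bytes(bandwidth_bps: float, rtt_s: float) -> int:
    """Bandwidth-delay product: bytes that must be in flight to keep the path full."""
    return int(round(bandwidth_bps * rtt_s / 8))

def request_udp_rcvbuf(size_bytes: int) -> int:
    """Ask the kernel for a receive buffer and return the size it actually granted."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        try:
            sock.setsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF, size_bytes)
        except OSError:
            pass  # some systems refuse requests above their limit instead of capping them
        return sock.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF)
    finally:
        sock.close()

needed = bdp_bytes(1e9, 0.180)  # hypothetical 1 Gbit/s path with 180 ms RTT
granted = request_udp_rcvbuf(needed)
print(f"BDP {needed} bytes; kernel granted {granted} bytes")
```

If the granted value is far below the BDP, the operating system limit itself needs raising (for example through sysctl on Linux) before the transfer tool can use larger buffers.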

Use Cases and Industry Applications

Real-world applications demonstrate the practical value of accelerated file transfer across diverse industries and workflow scenarios.

Media and Entertainment Applications

4K and 8K video distribution requires moving massive files between production facilities, post-production houses, and distribution networks. Standard transfer methods create bottlenecks that delay content delivery.

Post-production workflows involve moving raw footage, intermediate files, and finished content between editing systems, color correction suites, and rendering farms. Teams need to transfer files quickly to maintain production schedules.

Global content distribution enables same-day releases across multiple markets by moving finished programs from production facilities to broadcast networks and streaming platforms worldwide.

Live event coverage requires rapid transfer of video content from remote locations to broadcast facilities for immediate editing and distribution.

Healthcare Data Management

Medical imaging transfers include MRI scans, CT images, and other diagnostic data that can reach multi-gigabyte sizes per patient. Healthcare facilities need to share this data quickly for consultations and treatment planning.

Genomic research generates massive datasets that require secure sharing between research institutions, laboratories, and clinical facilities while maintaining patient privacy and regulatory compliance.

Telemedicine applications depend on transferring high-resolution medical images and patient data between remote clinics and specialist centers for diagnosis and treatment planning.

Manufacturing and Design Collaboration

CAD file distribution involves sharing complex 3D models, simulation data, and design specifications between engineering teams, suppliers, and manufacturing facilities worldwide.

Supply chain collaboration requires moving product specifications, quality control data, and manufacturing instructions between multiple organizations and locations.

Product development cycles accelerate when engineering teams can share large design files and simulation results quickly, enabling faster iteration and improved collaboration.

Financial Services Applications

Trading data replication ensures market information reaches multiple locations simultaneously for real-time decision making and regulatory compliance.

Regulatory reporting requires secure, timely delivery of large datasets to government agencies and regulatory bodies within strict deadlines.

Backup and disaster recovery operations for financial institutions involve moving large volumes of transaction data and customer information to secure off-site locations.

Risk management systems require rapid data sharing between trading floors, risk management centers, and regulatory reporting systems to maintain compliance and operational control.

Future of Accelerated File Transfer

Emerging technologies and changing network landscapes continue to drive innovation in accelerated file transfer solutions.

5G Network Integration

5G networks enable mobile high-speed transfers from field locations, remote production sites, and temporary facilities. Low latency and high bandwidth support real-time collaboration scenarios previously impossible with mobile connections.

Edge computing integration allows local acceleration and caching at 5G network edges, reducing latency and improving performance for mobile and IoT applications.

Mobile devices can now participate in high-speed transfer workflows, enabling field crews to upload large datasets and video files directly from capture locations.

AI-Powered Network Optimization

Machine learning algorithms analyze network conditions in real-time and adapt transfer strategies automatically. These systems learn from historical performance data to predict and prevent transfer issues.

Predictive optimization anticipates network congestion and adjusts transfer schedules or routing to maintain performance. AI systems can identify patterns that human administrators might miss.

Automated troubleshooting capabilities identify and resolve transfer issues without human intervention, reducing downtime and improving reliability for business-critical workflows.

Edge Computing and Localized Acceleration

Edge computing deployments enable localized acceleration and caching closer to data sources and destinations. This approach reduces backbone network traffic while improving performance.

Integration with content delivery networks (CDNs) enables hybrid acceleration, in which initial transfers reach edge locations quickly and then distribute to final destinations over optimized local networks.

IoT and sensor data collection benefits from edge acceleration when large datasets need movement from remote locations to central processing facilities.

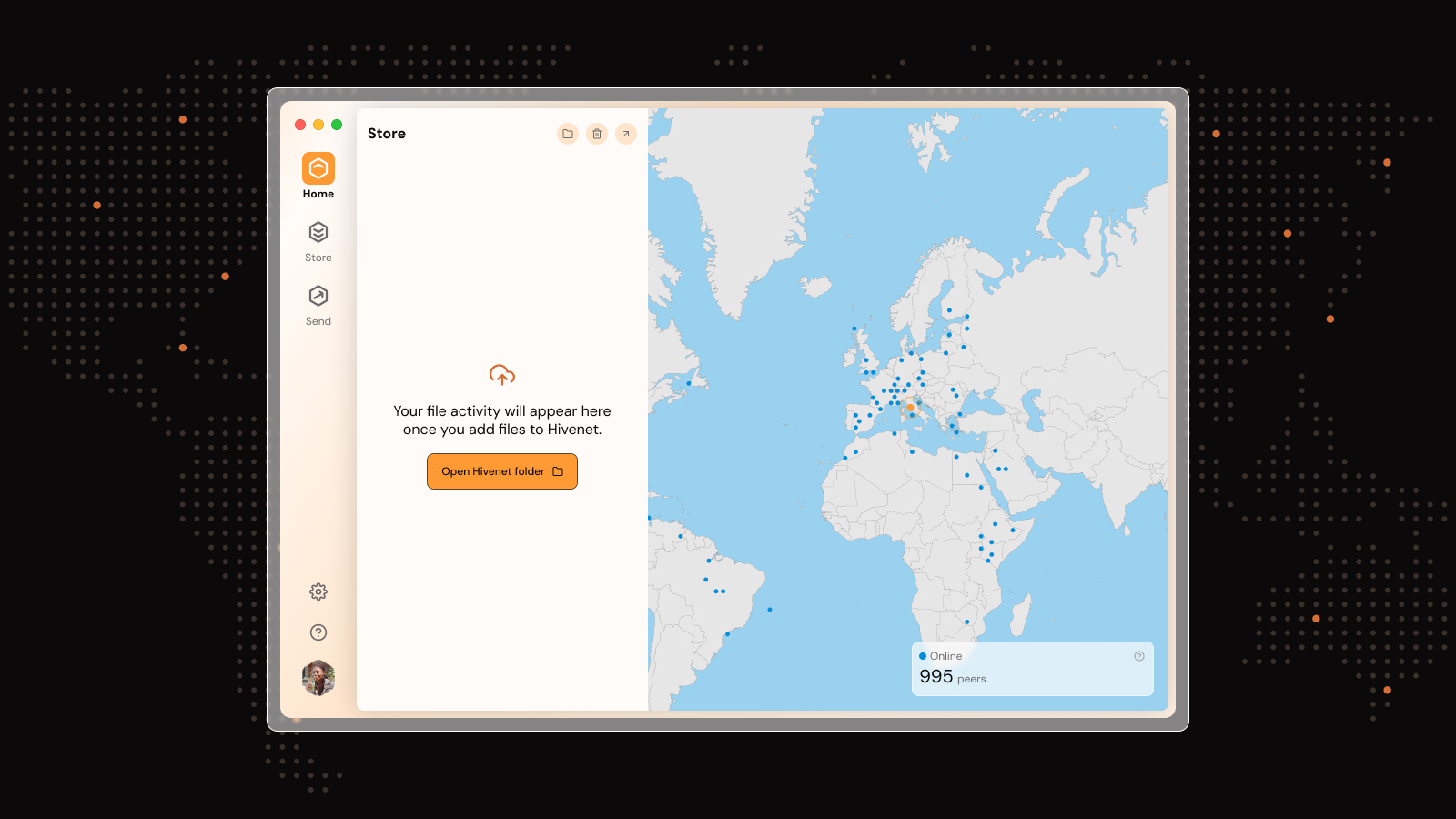

Hivenet is one example of distributed cloud infrastructure. Its decentralized network supports accelerated file transfer and aims to improve speed, reliability, and scalability for global data movement.

Quantum-Safe Encryption Preparation

Post-quantum cryptography research prepares for future security requirements when current encryption methods may become vulnerable to quantum computing attacks.

Security protocol evolution ensures long-term data protection even as computing capabilities advance. Organizations can prepare for future threats while maintaining current security standards.

Compliance frameworks continue evolving to address new security challenges and ensure accelerated transfer solutions meet future regulatory requirements.

Getting Started with Accelerated File Transfer

Organizations considering accelerated file transfer solutions should evaluate current transfer bottlenecks, assess workflow requirements, and plan implementation strategies that maximize return on investment.

Start by identifying the largest pain points in your current file transfer process. Calculate time spent on transfers, measure bandwidth utilization, and document workflow delays caused by slow data movement.

Evaluate different solutions based on your specific requirements for file sizes, security needs, integration capabilities, and budget constraints. Consider both immediate needs and future growth requirements.

Plan pilot implementations that demonstrate value while minimizing risk. Select use cases that showcase clear benefits and build support for broader deployment across your organization.

The transformation from hours-long transfers to minutes represents more than technical improvement—it enables new ways of working, collaborating, and competing in data-driven markets. Organizations that embrace accelerated file transfer technology position themselves to move faster, work more efficiently, and respond more quickly to changing business requirements.

Frequently Asked Questions (FAQ) about Accelerated File Transfer

What is accelerated file transfer?

Accelerated file transfer is a technology that optimizes the movement of data by using advanced protocols, typically based on UDP, to bypass the speed limitations of traditional TCP-based transfers. This results in much faster transfer speeds, especially over high-latency or unreliable networks.

How does accelerated file transfer differ from standard FTP or HTTP transfers?

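Standard FTP and HTTP transfers rely on TCP, which prioritizes reliable, in-order delivery but often underutilizes bandwidth over long distances: a sender can keep only a limited amount of unacknowledged data in flight per round trip, and it slows down sharply whenever it detects packet loss. Accelerated file transfer uses custom protocols with error correction and congestion control to maximize bandwidth utilization and speed up data movement.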
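The long-distance penalty follows from simple arithmetic: a single TCP stream can have at most one receive window of unacknowledged data in flight per round trip, so its throughput cannot exceed window size divided by round-trip time (RTT). The sketch below computes that ceiling and the bandwidth-delay product, the amount of in-flight data needed to fill a link. It assumes a fixed window and no packet loss; real TCP stacks use window scaling and congestion control, so treat the numbers as an illustration of the trend rather than a measurement.

```python
def max_tcp_throughput_mbps(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound for one TCP stream: at most one window of data per round trip."""
    return window_bytes * 8 * 1000 / rtt_ms / 1_000_000


def bandwidth_delay_product_bytes(link_mbps: float, rtt_ms: float) -> float:
    """Bytes that must be in flight, unacknowledged, to keep the link fully busy."""
    return link_mbps * 1_000_000 * rtt_ms / 8 / 1000


# 65,535-byte window (the maximum without TCP window scaling), 200 ms round trip
print(round(max_tcp_throughput_mbps(65_535, 200), 2))  # 2.62
# Window required to fill a 1 Gbps link at the same latency
print(bandwidth_delay_product_bytes(1000, 200))  # 25000000.0
```

A window of about 64 KB therefore carries only around 2.6 Mbit/s across a 200 ms path, while filling a 1 Gbps link at that latency needs roughly 25 MB in flight.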

Can I transfer large files with accelerated file transfer?

Yes, accelerated file transfer solutions are specifically designed to handle large file transfers efficiently, including files in the gigabyte and terabyte range.

Is accelerated file transfer secure?

Most accelerated file transfer solutions incorporate enhanced security features such as AES-256 encryption, end-to-end encryption, authentication, access controls, and integrity checks to ensure sensitive data is protected during transfer.
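The integrity-check part of that list can be sketched with Python's standard library alone: hash the file in fixed-size chunks on both sides and compare digests, using an HMAC keyed with a shared secret when the check must also show who produced the file. The key, chunk size, and function names below are assumptions for illustration. AES-256 encryption is not shown because Python's standard library does not include AES; it would come from a separate cryptography library.

```python
import hashlib
import hmac
import io
from typing import BinaryIO, Optional

CHUNK = 1024 * 1024  # read 1 MiB at a time so large files never sit fully in memory


def file_digest(stream: BinaryIO, key: Optional[bytes] = None) -> str:
    """SHA-256 of a stream, or HMAC-SHA256 when a shared secret key is given."""
    h = hmac.new(key, digestmod=hashlib.sha256) if key is not None else hashlib.sha256()
    for block in iter(lambda: stream.read(CHUNK), b""):
        h.update(block)
    return h.hexdigest()


def verify(stream: BinaryIO, expected_hex: str, key: Optional[bytes] = None) -> bool:
    """Compare the receiver's digest with the sender's in constant time."""
    return hmac.compare_digest(file_digest(stream, key), expected_hex)


payload = b"frame data " * 100_000  # about 1.1 MB, so hashing spans two chunks
key = b"hypothetical-shared-secret"
sent_digest = file_digest(io.BytesIO(payload), key)  # computed by the sender
ok = verify(io.BytesIO(payload), sent_digest, key)  # receiver, intact copy
tampered = verify(io.BytesIO(payload + b"!"), sent_digest, key)  # altered copy
print(ok, tampered)  # True False
```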

What happens if my transfer is interrupted?

Accelerated file transfer solutions often include checkpoint restart functionality, allowing interrupted transfers to resume from the last successful point rather than starting over, saving time and bandwidth.
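Checkpoint restart comes down to recording how many bytes have been safely written and seeking past them on the next attempt. The minimal sketch below shows the idea with local files standing in for the network; the checkpoint-file format, the function names, and the `max_chunks` switch used to simulate a dropped connection are all hypothetical. A production tool would likely also verify the already-written portion, for example with a hash, before trusting the checkpoint.

```python
import os
import tempfile
from typing import Optional

CHUNK = 4096  # small for the demo; real tools use much larger blocks


def load_offset(checkpoint: str) -> int:
    """Last byte offset confirmed written, or 0 for a fresh transfer."""
    try:
        with open(checkpoint) as f:
            return int(f.read().strip() or 0)
    except FileNotFoundError:
        return 0


def save_offset(checkpoint: str, offset: int) -> None:
    """Replace the checkpoint atomically so a crash never leaves a half-written one."""
    tmp = checkpoint + ".tmp"
    with open(tmp, "w") as f:
        f.write(str(offset))
    os.replace(tmp, checkpoint)


def resumable_copy(src: str, dst: str, checkpoint: str, max_chunks: Optional[int] = None) -> bool:
    """Copy src to dst starting from the last checkpoint; True once complete.

    max_chunks simulates a dropped connection by stopping early.
    """
    offset = load_offset(checkpoint)
    if not os.path.exists(dst):
        offset = 0  # nothing to resume into, so start over
    mode = "r+b" if offset else "wb"
    with open(src, "rb") as fin, open(dst, mode) as fout:
        fin.seek(offset)
        fout.seek(offset)
        copied = 0
        while True:
            if max_chunks is not None and copied == max_chunks:
                return False  # interrupted; progress up to `offset` is saved
            block = fin.read(CHUNK)
            if not block:
                break
            fout.write(block)
            fout.flush()
            os.fsync(fout.fileno())  # make the data durable before recording progress
            offset += len(block)
            save_offset(checkpoint, offset)
            copied += 1
        fout.truncate()  # drop any stale bytes beyond the end of src
    if os.path.exists(checkpoint):
        os.remove(checkpoint)
    return True


with tempfile.TemporaryDirectory() as d:
    src, dst, ckpt = (os.path.join(d, name) for name in ("src.bin", "dst.bin", "ckpt"))
    with open(src, "wb") as f:
        f.write(os.urandom(10 * CHUNK + 123))
    first = resumable_copy(src, dst, ckpt, max_chunks=4)  # connection "drops"
    resumed_from = load_offset(ckpt)  # 4 chunks already safely written
    second = resumable_copy(src, dst, ckpt)  # finishes only the remainder
    with open(src, "rb") as a, open(dst, "rb") as b:
        identical = a.read() == b.read()
print(first, resumed_from, second, identical)  # False 16384 True True
```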

Can I transfer multiple files simultaneously?

Yes, accelerated file transfer supports parallel data streams and multi-file transfers, enabling multiple files to be sent concurrently without sacrificing speed or data integrity.
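Parallel streams work by splitting data into pieces that travel independently and are reassembled by offset on arrival. The sketch below simulates that with a thread pool; `fetch_range` is a hypothetical placeholder for whatever request a real client would make for one byte range, and the range splitting and offset-based reassembly are the parts the example is meant to show.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple


def split_ranges(size: int, streams: int) -> List[Tuple[int, int]]:
    """Divide [0, size) into at most `streams` contiguous (start, end) byte ranges."""
    if streams < 1:
        raise ValueError("need at least one stream")
    if size == 0:
        return []
    step = -(-size // streams)  # ceiling division
    return [(start, min(start + step, size)) for start in range(0, size, step)]


def fetch_range(source: bytes, start: int, end: int) -> Tuple[int, bytes]:
    """Stand-in for one network stream requesting a single byte range."""
    return start, source[start:end]


def parallel_transfer(source: bytes, streams: int = 4) -> bytes:
    """Fetch all ranges concurrently, then place each piece at its own offset."""
    out = bytearray(len(source))
    with ThreadPoolExecutor(max_workers=streams) as pool:
        futures = [pool.submit(fetch_range, source, s, e)
                   for s, e in split_ranges(len(source), streams)]
        for fut in futures:
            start, chunk = fut.result()
            out[start:start + len(chunk)] = chunk
    return bytes(out)


print(split_ranges(10, 4))  # [(0, 3), (3, 6), (6, 9), (9, 10)]
data = bytes(range(256)) * 1000
print(parallel_transfer(data, streams=8) == data)  # True
```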

Do accelerated file transfer solutions support cloud platforms?

Many accelerated file transfer solutions integrate seamlessly with popular cloud platforms such as Google Cloud Platform, AWS, and Azure, facilitating hybrid workflows and cloud-based storage.

Are there file size limits for accelerated file transfer?

Typically, these solutions support very large file sizes with minimal or no file size limits, making them ideal for transferring high-resolution video files, raw images, and large datasets.

Can I password protect my file transfers?

Yes, many solutions offer password protection and other access controls to secure file transfers and restrict unauthorized access.
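A password-protected link usually means the server stores only a salted, deliberately slow hash of the password and checks each attempt against it. The sketch below does that with PBKDF2 from Python's standard library and also enforces an expiry time on the link. The `ShareLink` record, the function names, and the iteration count are assumptions for illustration, not any product's implementation.

```python
import hashlib
import hmac
import os
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

ITERATIONS = 600_000  # PBKDF2 work factor; tune for your hardware and policy


@dataclass
class ShareLink:
    """Hypothetical record stored for one password-protected download link."""
    salt: bytes
    pw_hash: bytes
    expires_at: datetime


def create_link(password: str, valid_for: timedelta) -> ShareLink:
    """Store a salted hash of the password, never the password itself."""
    salt = os.urandom(16)
    pw_hash = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return ShareLink(salt, pw_hash, datetime.now(timezone.utc) + valid_for)


def can_download(link: ShareLink, password: str, now: Optional[datetime] = None) -> bool:
    """Allow access only before expiry and with the correct password."""
    if now is None:
        now = datetime.now(timezone.utc)
    if now >= link.expires_at:
        return False
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode(), link.salt, ITERATIONS)
    return hmac.compare_digest(candidate, link.pw_hash)


link = create_link("correct horse battery", valid_for=timedelta(days=7))
print(can_download(link, "correct horse battery"))  # True
print(can_download(link, "wrong guess"))  # False
print(can_download(link, "correct horse battery", now=link.expires_at))  # False (expired)
```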

How can I monitor my file transfers?

Advanced solutions provide granular control and monitoring features, including email notifications, transfer status updates, and detailed audit logs to track transfer progress and completion.
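Status updates and audit logs can both hang off a single progress callback. The sketch below is a hypothetical `TransferMonitor` that reports at 25% milestones and appends JSON audit records; the `notify` callable stands in for email or other alerting, which is not implemented here.

```python
import json
import time
from typing import Callable, List


class TransferMonitor:
    """Hypothetical tracker that sends milestone notifications and keeps an audit log."""

    def __init__(self, transfer_id: str, total_bytes: int,
                 notify: Callable[[str], None] = print) -> None:
        if total_bytes <= 0:
            raise ValueError("total_bytes must be positive")
        self.transfer_id = transfer_id
        self.total = total_bytes
        self.done = 0
        self.notify = notify  # swap in an email or chat sender in practice
        self.audit: List[str] = []  # JSON lines; persist these somewhere durable
        self.pending = [25, 50, 75, 100]  # percent milestones not yet reported
        self._log("started", total_bytes=total_bytes)

    def _log(self, event: str, **details) -> None:
        record = {"ts": time.time(), "transfer": self.transfer_id, "event": event, **details}
        self.audit.append(json.dumps(record))

    def update(self, nbytes: int) -> None:
        """Record newly transferred bytes and report any milestones just crossed."""
        self.done = min(self.done + nbytes, self.total)
        percent = 100 * self.done // self.total
        while self.pending and percent >= self.pending[0]:
            milestone = self.pending.pop(0)
            self.notify(f"{self.transfer_id}: {milestone}% complete")
            self._log("progress", percent=milestone)
            if milestone == 100:
                self._log("completed", bytes=self.done)


messages: List[str] = []
monitor = TransferMonitor("job-42", total_bytes=1000, notify=messages.append)
for _ in range(4):
    monitor.update(300)
print(messages)
print([json.loads(line)["event"] for line in monitor.audit])
```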

Is accelerated file transfer suitable for small files?

While optimized for large files, accelerated file transfer solutions can also efficiently handle bulk transfers of many small files without compromising performance.
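Small files are slow mainly because each one carries fixed per-file costs (opening, metadata, and often a separate request), so total time grows with the number of files rather than the number of bytes. One common way to amortize that overhead, though not necessarily what any given product does, is to stream many files as a single archive. The sketch below uses Python's `tarfile` module in memory; the file names and contents are made up.

```python
import io
import tarfile
from typing import Dict


def bundle(files: Dict[str, bytes]) -> bytes:
    """Pack many small files into one uncompressed tar stream for a single transfer."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        for name, data in files.items():
            info = tarfile.TarInfo(name=name)
            info.size = len(data)
            tar.addfile(info, io.BytesIO(data))
    return buf.getvalue()


def unbundle(blob: bytes) -> Dict[str, bytes]:
    """Read the files back on the receiving side without writing to disk."""
    out: Dict[str, bytes] = {}
    with tarfile.open(fileobj=io.BytesIO(blob), mode="r") as tar:
        for member in tar.getmembers():
            if member.isfile():
                extracted = tar.extractfile(member)
                if extracted is not None:
                    out[member.name] = extracted.read()
    return out


small_files = {f"frames/{i:05d}.txt": f"frame {i}".encode() for i in range(1000)}
blob = bundle(small_files)  # one stream instead of 1,000 separate transfers
restored = unbundle(blob)
print(len(restored), "files restored from", len(blob), "bytes")
```

Tar adds a 512-byte header per file, so for very small files a compressed mode such as `"w:gz"` may be worth testing. Reading members with `extractfile` rather than `extractall` also avoids writing archive-supplied paths to disk.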

What industries benefit most from accelerated file transfer?

Industries such as media and entertainment, healthcare, manufacturing, engineering, and financial services rely heavily on accelerated file transfer to move large, sensitive datasets quickly and securely.

How does accelerated file transfer impact productivity?

By significantly reducing transfer times and improving reliability, accelerated file transfer increases productivity by enabling faster collaboration, quicker time-to-market, and more efficient workflows.

Can I use accelerated file transfer over Wi-Fi or mobile networks?

Yes, many solutions are optimized to perform well over various networks, including Wi-Fi, 4G, 5G, and other mobile connections, adapting to network conditions to maintain speed and reliability.
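Adapting to network conditions means continuously adjusting the sending rate based on feedback such as packet loss or rising delay. The classic rule from TCP congestion control, additive increase / multiplicative decrease (AIMD), is sketched below as a generic illustration; accelerated protocols may use their own controllers, for example ones driven by delay rather than loss, and the parameter values here are arbitrary assumptions.

```python
def aimd_step(rate_mbps: float, loss_detected: bool,
              increase_mbps: float = 10.0, decrease_factor: float = 0.5,
              floor_mbps: float = 1.0, ceiling_mbps: float = 1000.0) -> float:
    """One step of additive-increase / multiplicative-decrease rate control."""
    if loss_detected:
        rate = rate_mbps * decrease_factor  # back off sharply when congestion appears
    else:
        rate = rate_mbps + increase_mbps  # otherwise probe gently for spare capacity
    return max(floor_mbps, min(rate, ceiling_mbps))


rate = 100.0
history = []
for loss in [False, False, True, False, False, False, True]:
    rate = aimd_step(rate, loss)
    history.append(rate)
print(history)  # [110.0, 120.0, 60.0, 70.0, 80.0, 90.0, 45.0]
```

On Wi-Fi and mobile links, packet loss is not always a sign of congestion, which is one reason a controller that halves its rate on every loss can leave capacity unused on those networks.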

What is the environmental impact of accelerated file transfer?

Faster transfers can shorten the time that servers and network equipment spend moving data, which may reduce energy use and the associated carbon emissions compared with slower, less efficient methods.

How do I get started with accelerated file transfer?

Begin by assessing your current file transfer challenges, defining your security and performance needs, and evaluating solutions that fit your organizational requirements. Pilot testing can help demonstrate value before full deployment.

Liked this article? You'll love Hivenet


Secure, affordable, and sustainable cloud services—powered by people, not data centers.